Netflix just published clear rules for using generative AI on productions. The headline idea: you can use AI to help you think and mock up, but once its output appears in the final cut, Netflix wants extra care and often written approval.

To support global productions and stay aligned with best practices, we expect all production partners to share any intended use of GenAI with their Netflix contact, especially as new tools continue to emerge with different capabilities and risks.

– Netflix

What Netflix actually said

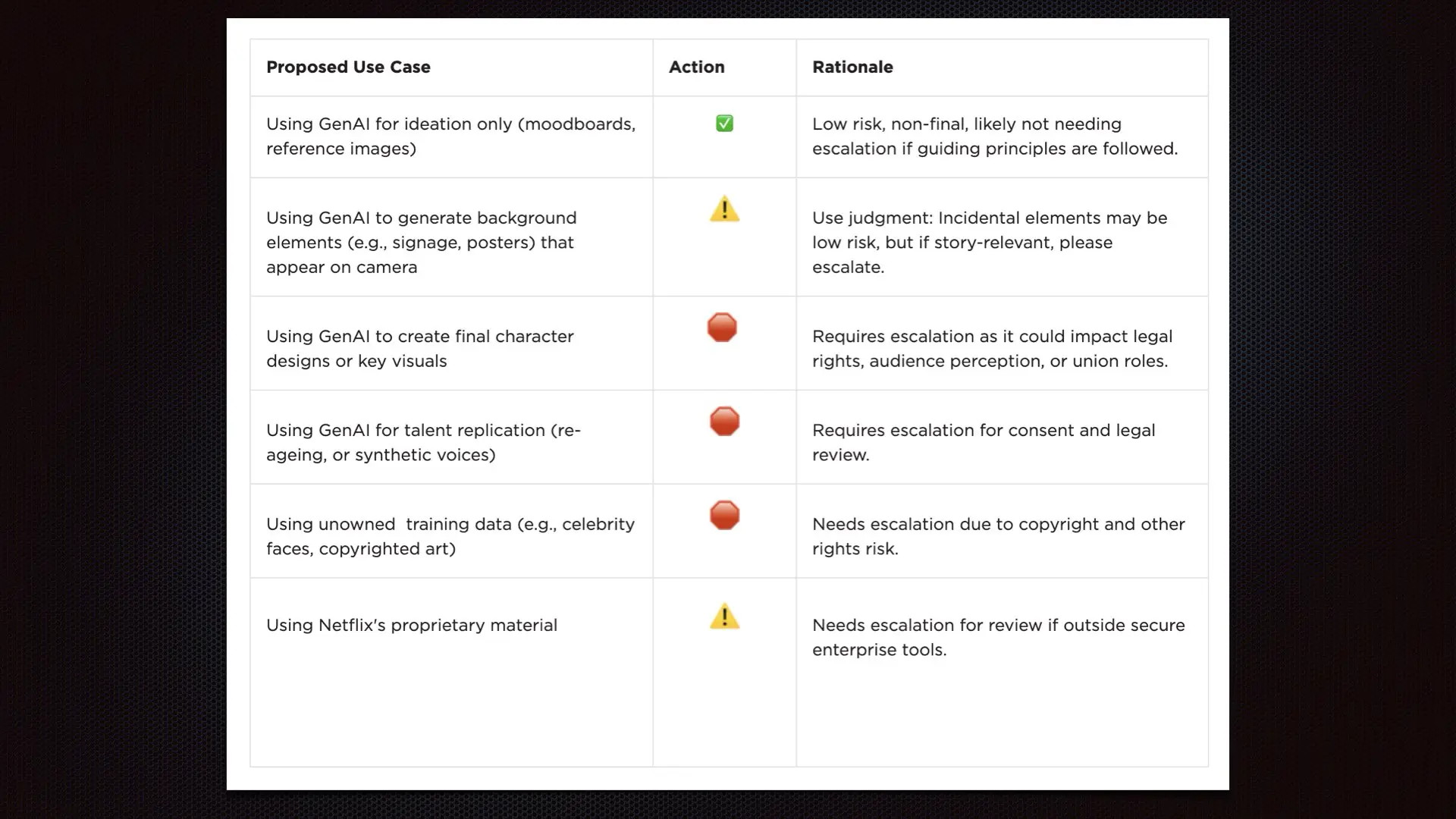

Netflix asks every production partner to tell their Netflix contact about any planned AI use. Low-risk uses that follow the guiding principles usually do not need legal review. If AI outputs appear in final deliverables or touch talent likeness, personal data, or third-party IP, you need written approval before moving forward.

Most low-risk use cases that follow the guiding principles below are unlikely to require legal review. However, if the output includes final deliverables, talent likeness, personal data, or third-party IP, written approval will be required before you proceed.

– Netflix
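To make the approval rule above concrete, here is a minimal Python sketch of how a production office might triage a planned AI use. It is one hypothetical reading of the summary, not Netflix's actual process: the `PlannedAIUse` record, its field names, and the `review_path` function are invented for illustration, and the fallback for uses that do not follow the principles is an assumption.

```python
from dataclasses import dataclass


@dataclass
class PlannedAIUse:
    """A planned GenAI use on a production (hypothetical record, for illustration only)."""
    description: str
    in_final_deliverables: bool = False
    touches_talent_likeness: bool = False
    uses_personal_data: bool = False
    uses_third_party_ip: bool = False
    follows_guiding_principles: bool = True


def review_path(use: PlannedAIUse) -> str:
    """Rough triage of the approval rule quoted above; not Netflix's actual process."""
    # Any of these four triggers means written approval before proceeding.
    if (
        use.in_final_deliverables
        or use.touches_talent_likeness
        or use.uses_personal_data
        or use.uses_third_party_ip
    ):
        return "written approval required"
    # Low-risk uses that follow the principles are unlikely to need legal review,
    # but they are still shared with the Netflix contact.
    if use.follows_guiding_principles:
        return "share with Netflix contact; legal review unlikely"
    # Assumption: anything else is escalated for guidance rather than self-approved.
    return "escalate to Netflix contact"


print(review_path(PlannedAIUse("AI moodboard for pre-production")))
# share with Netflix contact; legal review unlikely
print(review_path(PlannedAIUse("AI-generated poster seen on screen", in_final_deliverables=True)))
# written approval required
```

The order of the checks matters: the written-approval triggers are tested first, so a use that touches talent likeness never slips through as "low risk" just because it otherwise follows the principles.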

The 5 guiding principles in simple words

- Do not copy protected material. Avoid outputs that replicate copyrighted or unowned content.

- Keep inputs safe. Use tools that do not store, reuse, or train on your production data.

- Prefer secured enterprise environments when possible.

- Treat AI images, audio, and text as temporary. They should not be in final deliverables unless reviewed and cleared.

- Respect labor and talent. Do not replace union-covered work or generate new talent performances without consent.

AI-generated content must be used with care, especially when it forms a visible or story-critical part of the production. Whether you’re designing a world, a character, or artwork that appears in a scene, the same creative and legal standards apply as with traditionally produced assets.

– Netflix

Final cut vs. temporary media

If AI-generated elements appear on screen or in the soundtrack, even as background signage or an on-screen document, flag them early for legal guidance. Story-relevant or prominent pieces require greater care and may need rights clearance. Mock up with AI if you like, but final versions should include meaningful human input and go through review.

Audiences should be able to trust what they see and hear on screen. GenAI (if used without care) can blur the line between fiction and reality or unintentionally mislead viewers. That’s why we ask you to consider both the intent and the impact of your AI-generated content.

– Netflix

What usually needs written approval

- Data use: Do not feed proprietary assets or personal data into tools without explicit approval. Never train on unowned material or another artist’s work.

- Creative output: Do not generate key story elements such as main characters or signature visuals without approval. Avoid prompts that reference public figures or copyrighted materials.

- Talent and performance: Digital replicas and significant performance changes demand consent and legal review.

- Ethics and representation: Do not mislead audiences or blur fact and fiction in ways that could be mistaken for real events. Respect union roles.

When not using enterprise tools, ensure that any AI tools, plugins, or workflows you use do not train on inputs or outputs, as using the wrong license tier or missing pre-negotiated data terms could compromise confidentiality. You are responsible for reviewing the terms and conditions (T&Cs). Please check with your Netflix contact if you have any further questions.

– Netflix

Digital replicas, voice, and consent

Creating a recognizable digital voice or likeness requires documented consent. Once consent is granted, additional consent is not required when the output stays within the approved script, covers safety-only actions, or leaves the performer unrecognizable. Visual or voice changes that could alter meaning should be handled with caution and are usually reviewed.
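The consent rules above form a small decision procedure. The Python sketch below shows one hypothetical reading of them; the function name, parameter names, and return strings are invented for illustration, and treating everything outside the listed exceptions as "seek additional consent" is an assumption rather than anything the summary states outright.

```python
def replica_consent_status(
    has_documented_consent: bool,
    within_approved_script: bool = False,
    safety_only: bool = False,
    performer_unrecognizable: bool = False,
    could_alter_meaning: bool = False,
) -> str:
    """One hypothetical reading of the digital-replica consent rules summarized above."""
    # Creating a recognizable digital voice or likeness starts with documented consent.
    if not has_documented_consent:
        return "obtain documented consent"
    # Visual or voice changes that could alter meaning: proceed with caution, expect review.
    if could_alter_meaning:
        return "handle with caution; expect review"
    # Exceptions after consent: approved script, safety-only actions, or unrecognizable performer.
    if within_approved_script or safety_only or performer_unrecognizable:
        return "no additional consent needed"
    # Assumption: outside those exceptions, go back to the performer.
    return "seek additional consent"


print(replica_consent_status(True, within_approved_script=True))
# no additional consent needed
```

Checking "could alter meaning" before the exceptions is a deliberate cautious choice: a change that shifts what a performance says gets flagged even if it nominally stays within an approved script.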

Security and confidentiality

Prefer Netflix-approved or enterprise-grade tools. Confirm that tool licenses forbid training on your inputs, and keep scripts, production stills, and talent materials protected. When in doubt, escalate. You are responsible for checking the terms and keeping records.

Fast checklist for your next shoot

Pre-production.

- Ideation, moodboards, and references made with AI: Generally low risk when you follow the principles.

- Create lookbooks or rough comps to explore options. Keep these as temporary drafts.

On set and in post.

- Background signage or props made with AI: Use judgment. If the item is story-relevant or clearly visible, escalate.

- Final character designs, key visuals, or any talent replication: Escalate and expect written approval.

- Any training on unowned data or use of proprietary production materials: Do not proceed without clearance.

- Keep a log of tools, prompts, and outputs: Note who reviewed and approved each use.
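A log like the one the last checklist item describes can be as simple as a shared spreadsheet. The Python sketch below shows one way to keep it, assuming a CSV file is acceptable to your production office; the `AIUseLogEntry` columns, the tool name, and the prompt are hypothetical placeholders, not a Netflix-mandated format.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class AIUseLogEntry:
    """One row of a hypothetical on-set AI log; column names are illustrative."""
    day: str
    tool: str
    prompt: str
    output_ref: str        # file name or asset ID of the generated output
    reviewed_by: str
    approved_by: str = ""  # stays empty until someone signs off


def to_csv(entries: list[AIUseLogEntry]) -> str:
    """Serialize entries to CSV text that can live in a shared spreadsheet."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(AIUseLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buf.getvalue()


def pending_approval(entries: list[AIUseLogEntry]) -> list[AIUseLogEntry]:
    """Entries nobody has approved yet: these should not reach the final cut."""
    return [e for e in entries if not e.approved_by]


log = [
    AIUseLogEntry(
        day=date.today().isoformat(),
        tool="hypothetical-image-tool",
        prompt="rainy neon street moodboard, no real brands",
        output_ref="moodboard_v1.png",
        reviewed_by="production designer",
    ),
]
print(to_csv(log))
print(len(pending_approval(log)))  # 1
```

Keeping `approved_by` empty by default makes the unapproved items easy to filter, which matches the checklist's emphasis on noting who reviewed and who approved each use.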

What filmmakers should take from this

Use AI as a thinking partner and a rapid prototyping assistant. Keep draft outputs out of the final picture and sound unless you have cleared the legal and talent steps. Protect your inputs, document your process, and loop in your Netflix contact early. This keeps your production safe, protects talent, and builds trust with viewers.

Bottom line: Netflix encourages AI for brainstorming and temp comps, but wants whatever reaches the final picture and sound to be human-guided and properly cleared.

That still leaves plenty of open questions about these guidelines:

- Where would you draw the line between AI assistance and on-screen use?

- Which steps in your pipeline should stay purely human, and why?

- If you were producing today, which AI tasks that never touch the final frame would you greenlight?

- Does this policy protect artists or slow innovation in practice?

- How would you log AI use on set in a way busy crews can actually follow?

- Which role benefits most right now: art department, previsualization, or post?

- What is your red line for generative AI in cinema?