The next (and insane) generation of AI-generated imagery has been announced, and now it aims to create videos. Meet Sora. Developed by OpenAI and released to testers only, this technology can create a cinematic, blockbuster-looking video from a short text prompt. It’s amazing and seems too good to be true. However, it is true. Should filmmakers be worried? Is it game over for filmmakers? Well, basically not. Read on.

Sora: Create a cinematic video from a text prompt

This was very much anticipated. However, we didn’t think it would come so soon or look so good. OpenAI has just introduced Sora, which is a text-to-video model. Sora can generate videos up to a minute long while maintaining visual quality and adherence to the user’s prompt. Yep, someone with no skill, creativity, or passion can make videos that the rest of us have worked our entire careers to learn how to make. That’s a thought. But there’s a bright side as well. First, let’s understand what Sora does. BTW, in this article, you can find the 33 videos released so far by OpenAI that demonstrate Sora’s abilities. These videos were made by testers, as Sora has not been released to the public (not even in beta). We discuss why later. Each video shows the text prompt at the beginning, followed by the generated 10-20 second video. So read, watch, and enjoy.

The definition of Sora

As stated by OpenAI: “Sora can generate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background. The model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world. The model has a deep understanding of language, enabling it to accurately interpret prompts and generate compelling characters that express vibrant emotions. Sora can also create multiple shots within a single generated video that accurately persist characters and visual style”.

Basically, Sora makes us believe that AI-generated movies are possible. “Other, more immediate use cases could include B-roll / stock video, video ads, and video editing—all using AI!” say the AI enthusiasts. Furthermore, Sora doesn’t just do text-to-video. It can also bring still images to life as video, extend videos in either direction, edit videos by altering styles and environments, merge two videos, and more. For now, Sora’s FAQ is very short and straightforward. Explore it below:

- Q: Is Sora available right now to me? A: No, it is not yet widely available.

- Q: Is there a waitlist or API access? A: Not as of Feb 16th, stay tuned!

- Q: How can I get access to Sora? A: Stay tuned! We have not rolled out public access to Sora.

For now, available only for red teamers, and filmmakers(?)

As explained by OpenAI: “Today, Sora is becoming available to red teamers to assess critical areas for harms or risks. We are also granting access to several visual artists, designers, and filmmakers to gain feedback on how to advance the model to be most helpful for creative professionals. We’re sharing our research progress early to start working with and getting feedback from people outside of OpenAI and to give the public a sense of what AI capabilities are on the horizon”. Yes, you heard right. OpenAI wants filmmakers to utilize Sora for their needs. OpenAI thinks that Sora can be beneficial to content creators. However, not everybody agrees with that. For instance, here’s a comment in the OpenAI forum: “I work as a stop motion animator. As a professional animator, I am blown away by the capabilities that Sora seems to demonstrate. I’m intrigued, but also terrified. For the longest time, stop motion animators have feared that CG animators are coming for our jobs. Instead, it now seems that AI is coming for all of the CG jobs and that it’ll likely come to conquer all of the stop-motion jobs shortly after that. I’m interested in lending my unique perspective to OpenAI however I can. I also want to warn OpenAI that Sora seems to have the potential to put a huge number of people in film and animation out of work. Perhaps that comes as no surprise”. That’s one reason OpenAI mentions a few times that this technology is FOR creators and NOT AGAINST them.

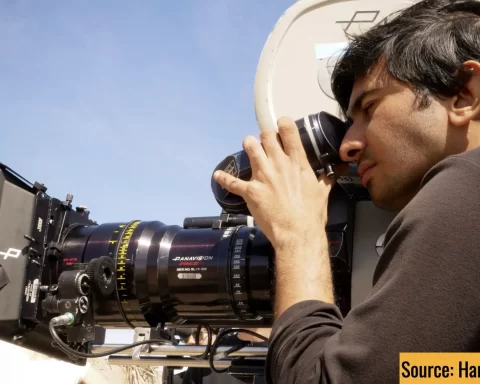

Choose the camera, and format: Bye-bye stock video licensing

Sora allows you to go wild. You can get outstandingly realistic and cinematic results. It will kill the stock video market, as there’s no need to pay an expensive license fee for an 8K helicopter shot of a castle in the middle of the Atlantic Ocean. You can write a prompt instead. It will take you 2 minutes, and the result is yours. You can also choose the camera and format. For instance, the prompts of some of the videos released by OpenAI asked for a 35mm shoot and even a closeup on 70mm film stock. You can write a prompt asking for a “Filmed in IMAX film cameras” commercial. You will not need to rent an IMAX 9802 for that.

Weaknesses are not relevant

OpenAI acknowledges that the current model has weaknesses. It may struggle with accurately simulating the physics of a complex scene, and may not understand specific instances of cause and effect. For example, a person might take a bite out of a cookie, but afterward, the cookie may not have a bite mark. The model may also confuse spatial details of a prompt, for example, mixing up left and right, and may struggle with precise descriptions of events that take place over time, like following a specific camera trajectory. However, given the pace of AI progress, we expect those weaknesses to be reduced or eliminated within the next year. As you can see, there are a lot of artifacts and incorrect physics in the Sora videos. Nevertheless, the model is likely to improve quickly. Hence, all those mismatches could be gone by, let’s say, 2026, which is just around the corner.

Technology explained: Everything is possible

Sora is a diffusion model, which generates a video by starting with one that looks like static noise and gradually transforming it by removing the noise over many steps. Sora is capable of generating entire videos all at once or extending generated videos to make them longer. “By giving the model foresight of many frames at a time, we’ve solved a challenging problem of making sure a subject stays the same even when it goes out of view temporarily. We can train diffusion transformers on a wider range of visual data than was possible before, spanning different durations, resolutions, and aspect ratios” says OpenAI.
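
To make the "start from noise, remove it over many steps" idea concrete, here is a minimal toy sketch in Python. It is not Sora's code and not OpenAI's method. The function names (`make_noise_video`, `toy_noise_predictor`, `denoise`) are hypothetical, the "video" is just a small grid of numbers, and the noise predictor cheats by looking at a known clean target, whereas a real diffusion model would use a trained network guided by the text prompt. The one point it does illustrate is that every step updates all frames together.

```python
import random


def make_noise_video(num_frames, num_pixels, rng):
    """Return a 'video' of pure static: Gaussian noise for every pixel of every frame."""
    return [[rng.gauss(0.0, 1.0) for _ in range(num_pixels)]
            for _ in range(num_frames)]


def toy_noise_predictor(video, clean_video):
    """Hypothetical stand-in for a trained network.

    A real model would estimate the noise from the noisy video and the prompt.
    This toy version simply compares against a known clean target.
    """
    return [[v - c for v, c in zip(frame, clean_frame)]
            for frame, clean_frame in zip(video, clean_video)]


def mean_abs_error(video, clean_video):
    """Average absolute difference between two videos of the same shape."""
    diffs = [abs(v - c)
             for frame, clean_frame in zip(video, clean_video)
             for v, c in zip(frame, clean_frame)]
    return sum(diffs) / len(diffs)


def denoise(noisy_video, clean_video, steps):
    """Remove noise gradually; each step processes all frames at once."""
    video = [frame[:] for frame in noisy_video]
    errors = []
    for step in range(steps):
        predicted_noise = toy_noise_predictor(video, clean_video)
        # Remove a growing share of the remaining noise; the last step removes all of it.
        fraction = 1.0 / (steps - step)
        video = [[v - fraction * n for v, n in zip(frame, noise_frame)]
                 for frame, noise_frame in zip(video, predicted_noise)]
        errors.append(mean_abs_error(video, clean_video))
    return video, errors


if __name__ == "__main__":
    rng = random.Random(0)
    target = [[0.5] * 4 for _ in range(3)]  # 3 frames, 4 "pixels" each
    static = make_noise_video(3, 4, rng)
    _, history = denoise(static, target, steps=10)
    for i, err in enumerate(history, start=1):
        print(f"step {i}: mean error {err:.4f}")
```

Running the script prints the error after each step, which shrinks until the static has turned into the target frames.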

Based on DALL·E 3

Sora builds on past research in DALL·E and GPT models. It uses the recaptioning technique from DALL·E 3, which involves generating highly descriptive captions for the visual training data. As a result, the model can follow the user’s text instructions in the generated video more faithfully. In addition to being able to generate a video solely from text instructions, the model can take an existing still image and generate a video from it, animating the image’s contents with accuracy and attention to small details. The model can also take an existing video and extend it or fill in missing frames. It’s interesting to note that, from our perspective, most of the videos generated so far look as if they were shot at 60 FPS (and not 24 FPS). It might be because Sora fills in the missing frames, as explained.

Safety: Because it becomes dangerous

This is what OpenAI says about the safety and precaution measures taken for Sora: “We’ll be taking several important safety steps ahead of making Sora available in OpenAI’s products. We are working with red teamers—domain experts in areas like misinformation, hateful content, and bias—who will be adversarially testing the model. We’re also building tools to help detect misleading content such as a detection classifier that can tell when a video was generated by Sora. We plan to include C2PA metadata in the future if we deploy the model in an OpenAI product”. For example, once Sora is in an OpenAI product, a text classifier will check and reject text input prompts that violate OpenAI’s usage policies, like those that request extreme violence, sexual content, hateful imagery, celebrity likeness, or the IP of others. OpenAI has also developed robust image classifiers that review the frames of every generated video to help ensure that it adheres to the usage policies before it’s shown to the user. The danger is that users will not believe videos anymore.

Closing thoughts

Sora will kill the stock video licensing market. Also, CGI artists need to recalculate their routes ASAP. Animators are at risk as well. Think about this: you will be able to create a high-end commercial shot on RED and ARRI cameras by writing a piece of text. A few high-quality, professional-looking versions of that commercial will be ready in an hour. We creators pay this price by letting these technologies train on our art. It’s our fault. On the other hand, filmmakers can utilize Sora to cut expenses and unleash ideas and imagery that were not accessible before. And what about copyright? OpenAI wants every video created with Sora to be marked, so that users know it’s AI content. Finally, OpenAI emphasizes: “We’ll be engaging policymakers, educators, and artists around the world to understand their concerns and to identify positive use cases for this new technology”. However, it also says: “Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it. That’s why we believe that learning from real-world use is a critical component of creating and releasing increasingly safe AI systems over time”. So what do you think? Is it game over for filmmakers?

Back in the day, it took a lot of folks to operate a large corn farm. Now, it can be done with 1-3 people thanks to technology: big high-tech tractors, improved chemicals, and other technologies. All sectors gravitate toward efficiency. The Sora AI video is impressive, but some shots have an artificial edge to them. It is ready for the training or educational video market because a realistic look is not always important in that genre. From what I saw, it’s not ready for most docs and live-action movies. It may get there in the future, so that the only humans a production may require are a director and a producer. Regarding stock footage – I founded StormStock 30 years ago. We specialize in high-end weather and climate footage. We have remained competitive by staying on the leading edge of production technology. We serve only clients who need the very best content, not all of the production market. For at least a while, there will remain two distinct markets for stock footage: a small high-end market and a large budget market. AI is close to taking a part of the latter.

It’s so depressing. All this tech is going to do is widen the wage gap and harm the creative spirit. Soon a 10-year-old will be able to make extremely high-quality video with a few text prompts. How can anyone feel proud of their work going forward? I know large production companies that have already been playing around with AI for years, and they’re going to blindly jump on tech like this the second it becomes widely available. I knew tech would change and evolve greatly, but I didn’t think I’d see my career as a cinematographer get potentially wiped out by these horrible AI companies. These are dark times. I feel lost.