The Backstreet Boys’ Into the Millennium residency at the Las Vegas Sphere is the first mainstream deployment of the Sphere’s proprietary Big Sky cinema camera in a live pop concert. This moment represents a cultural and technological milestone that YMCinema defines as Premium Cinematic Broadcasting, the fusion of live music with the most elite cinema imaging systems ever built. From the Wizard of Oz prototype film to U2’s Sphere residency, from NVIDIA-powered post pipelines to cinematic camera orchestras at major sports events, this residency is the culmination of years of experimentation. For the first time, the public is witnessing a pop concert captured and displayed with the same technological gravity as IMAX.

The Road to Premium Cinematic Broadcasting

The term Premium Cinematic Broadcasting has been a recurring theme in YMCinema coverage. It describes the transformation of live concerts, shows, and sports into events captured with cinema-grade tools, surpassing conventional broadcast video. This trend began with experiments using ARRI, RED, and Sony cameras for live shows, such as the Super Bowl halftime show shot on 12 Sony VENICE cinema cameras and the World Karate Championships livestream, which used three ARRI AMIRA Live cameras, a Fujinon PL Box Lens, and a Canon PL 25-250. Over the past two years, YMCinema has documented a rapid acceleration of this practice. For instance, “Sony VENICE 2, BURANO, FR7: A Lethal Combination for Cinematic Broadcasting” analyzed how Sony’s lineup was designed not only for narrative filmmaking but also for live broadcasting. Similarly, “An Orchestra of Cinema Cameras to Shoot an Orchestra Live Show” explored how high-end cameras can elevate live performance into cinematic spectacle. All of these experiments laid the groundwork. But the Sphere’s introduction of the Big Sky camera marks the beginning of a new category: concerts presented not just cinematically, but with an imaging system built from scratch for a 16K dome.

Big Sky: From Patent to Pop Concert

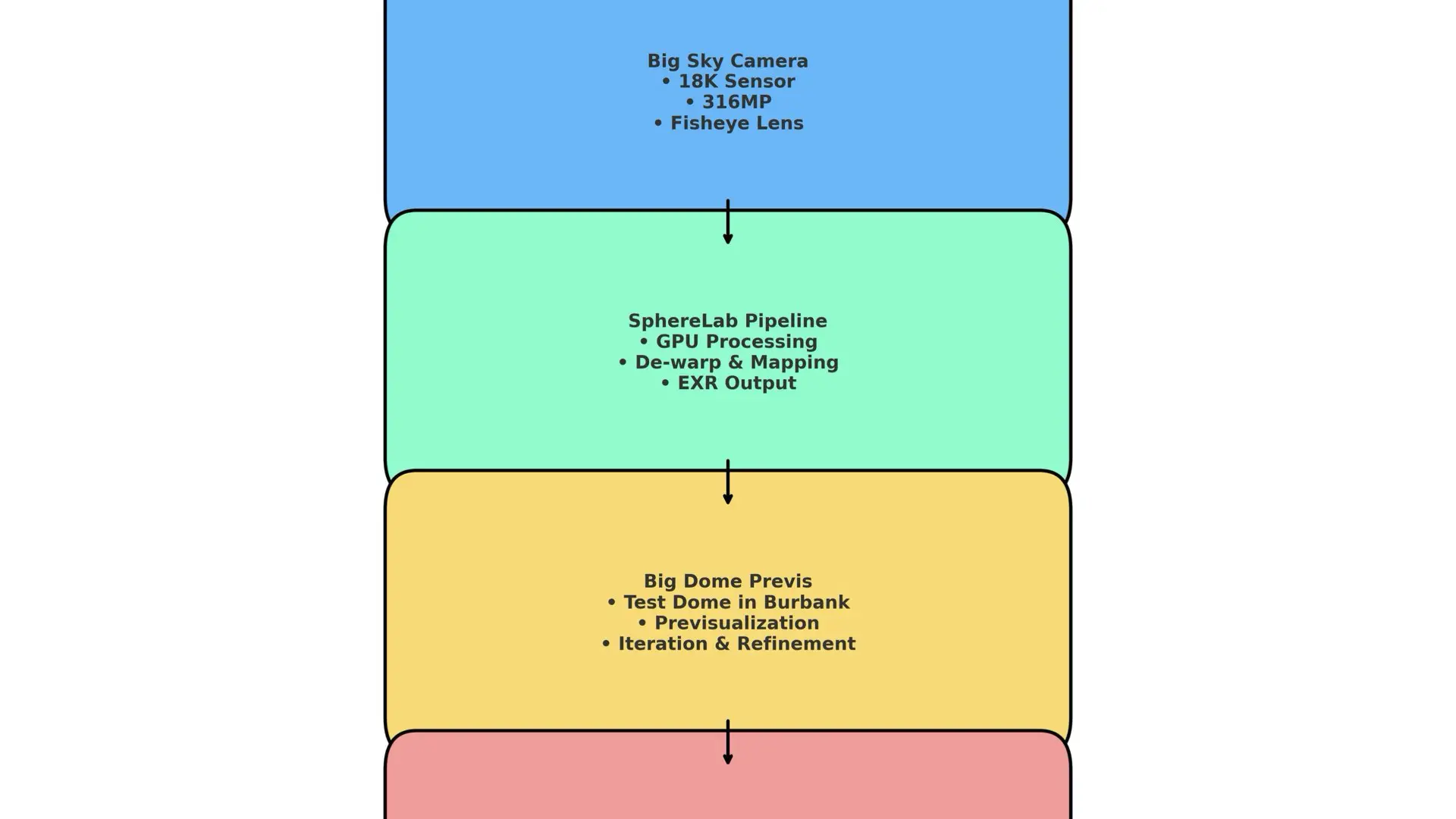

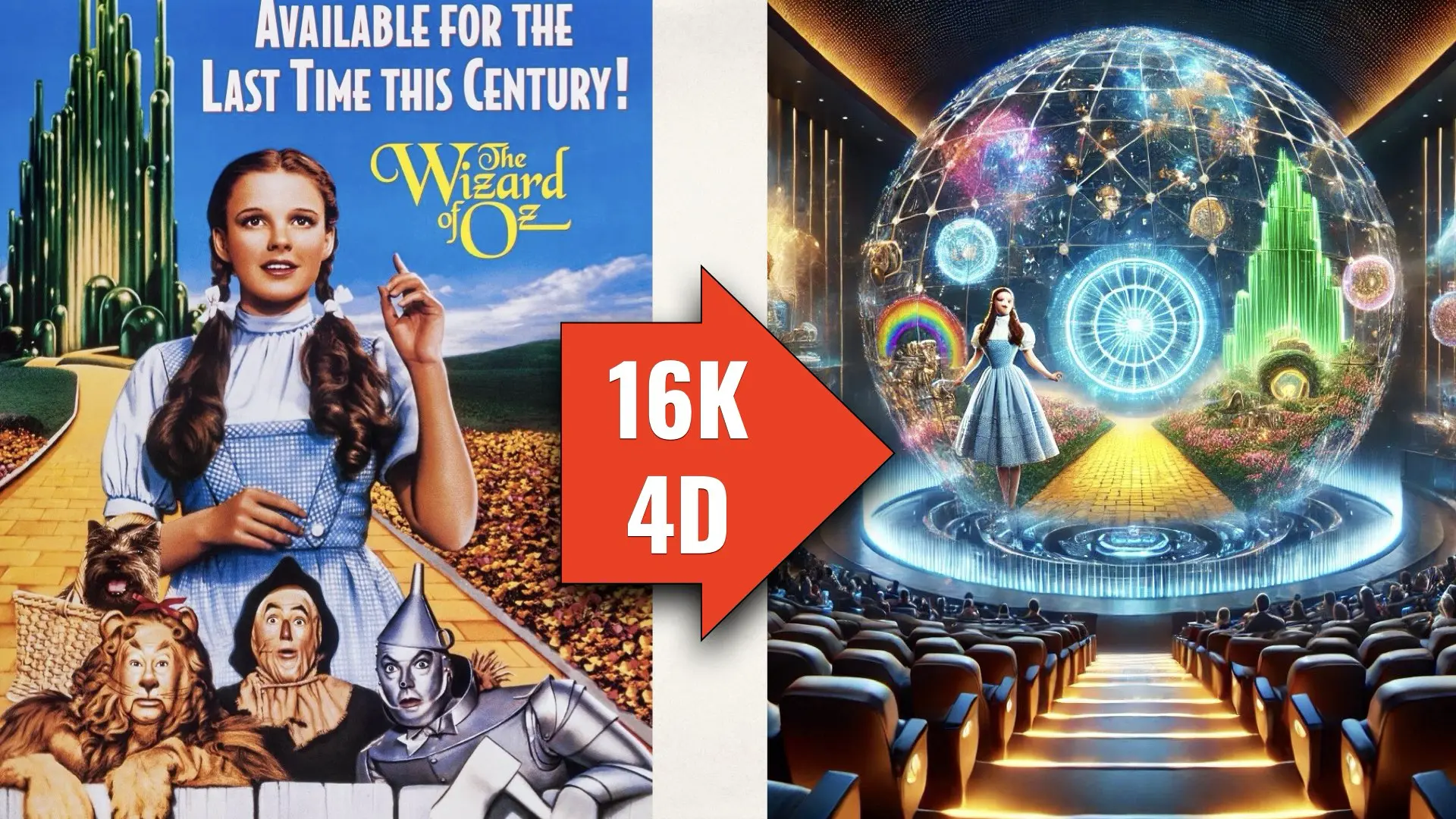

In “The Big Sky Cinema Camera: Breaking Down the Patent Behind the Las Vegas Sphere’s Cutting-Edge Imagery,” YMCinema analyzed how the Big Sky system emerged from a proprietary sensor design: a 316-MP square-format imager paired with a 12-inch fisheye lens. Unlike stitched arrays of cinema cameras, Big Sky captures a seamless circular image that can be mapped directly onto Sphere’s massive LED dome. The Big Sky pipeline is supported by SphereLab, an in-house GPU-accelerated post workflow. “150 NVIDIA RTX A6000 GPUs Power the IMAX on Steroids” detailed how this render farm processes Big Sky’s extreme data rate, which reaches 32 gigabits per second, as described in “Shooting a Movie on a Data Rate of 32 Gigabits Per Second.” The first public applications of Big Sky were cinematic: “ALEXA 65 and Big Sky Cinema Camera to Shoot First Film to the Giant MSG Sphere” and, later, “The Wizard of Oz 16K Sphere Project: Reimagining Cinema with Cutting-Edge Technology” showed the camera’s potential to redefine narrative cinema. But it was U2’s residency, captured with Big Sky rigs as documented in “V-U2: An Immersive Concert Shot on the Big Sky Cinema Camera,” that hinted at its future in live concerts. Now, with the Backstreet Boys, Big Sky enters the mainstream.

Siberia: The Cinematic Core of the Show

The Siberia sequence is where Premium Cinematic Broadcasting crystallizes. Reviewers have described how the Backstreet Boys’ faces appear frozen in an arctic landscape projected across the Sphere’s 16K dome. As the song builds, these faces animate, sing, and synchronize with the live performers on stage. This illusion is possible because of Big Sky’s real-time motion capture and ultra-wide imagery, shot during rehearsals and pre-visualization inside the Big Dome prototype in Burbank. Unlike previous cinematic applications, here the camera is not just providing background plates but actively constructing a narrative world for a live concert, merging digital assets with living performers. The result is closer to IMAX documentary immersion than to a standard residency light show.

From Postcards to Pop

Sphere audiences first experienced the dome’s potential through cinematic showcases such as Postcard from Earth, covered in “Sphere’s Postcard from Earth Review: An IMAX Experience on Steroids.” That project proved that cinematic spectacle at 16K resolution can leave audiences breathless. But it remained a controlled, curated film. By contrast, the Backstreet Boys residency is messy, unpredictable, and live. The challenge is synchronizing live vocals, choreography, and real-time energy with Big Sky’s ultra-precise capture pipeline. That synchronization is what elevates this from an engineering experiment to Premium Cinematic Broadcasting.

Comparing to Other Cinematic Broadcasts

When YMCinema covered “The Making of Anyma’s The End of Genesys at the Las Vegas Sphere: A Groundbreaking Fusion of Music and Technology,” it was clear that electronic music could merge with immersive visuals. Yet even that was primarily a performance enhanced by visual content. The Backstreet Boys residency is different. It is nostalgia-driven pop staged as cinematic spectacle, produced with the same seriousness as a Hollywood feature. It demonstrates that Premium Cinematic Broadcasting is not limited to avant-garde acts or elite sports. It can be mainstream.

Tech Deep Dive: The Tools and Methods Behind Backstreet Boys’ Sphere Spectacle

The Into the Millennium residency is a masterclass in technical execution, combining cinema-grade cameras, custom software, motion-control rigs, real-time rendering, and vast playback servers to transform a boy-band concert into what YMCinema defines as Premium Cinematic Broadcasting.

Big Sky: The Cinematic Core

At the heart of the project lies the Big Sky cinema camera, a system built exclusively for Sphere. Its 316-megapixel square sensor, designed with STMicroelectronics, records at 18K resolution through a single fisheye lens nearly a foot across. Unlike traditional multi-camera dome rigs that require stitching, Big Sky delivers one seamless frame that maps directly onto Sphere’s LED interior. This design eliminates the seams, perspective breaks, and parallax issues that would shatter the illusion across a 160,000-square-foot surface. The data load is staggering: each capture can push 32 gigabits per second of RAW data. Storage and offload are supported by custom recorders that duplicate footage instantly to protect against corruption. Every second of Big Sky material is irreplaceable, so redundancy is non-negotiable.
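To put those figures in perspective, here is a minimal back-of-the-envelope sketch. It uses only the two numbers quoted above (316 megapixels and 32 gigabits per second) and makes three assumptions that are not Sphere specifications: the data rate is treated as sustained, gigabytes are decimal (10^9 bytes), and "instant duplication" means exactly two copies. The function names are illustrative.

```python
import math

SENSOR_PIXELS = 316_000_000   # 316 MP, figure quoted in the article
DATA_RATE_GBIT_S = 32.0       # gigabits per second, figure quoted in the article


def square_side_pixels(total_pixels):
    """Side length, in pixels, of a square sensor with this many pixels."""
    return math.sqrt(total_pixels)


def storage_gigabytes(seconds, rate_gbit_s=DATA_RATE_GBIT_S, copies=1):
    """Decimal gigabytes written in `seconds` at a sustained rate.

    `copies` models redundant recording; two copies is an assumption here.
    """
    return rate_gbit_s / 8 * seconds * copies


side = square_side_pixels(SENSOR_PIXELS)
print(f"Sensor side: about {side:,.0f} px")
print(f"One minute, single copy: {storage_gigabytes(60):,.0f} GB")
print(f"One minute, two copies:  {storage_gigabytes(60, copies=2):,.0f} GB")
```

A square sensor with 316 million pixels works out to just under 17,800 pixels per side, which is consistent with the "18K" label, and a sustained 32 gigabits per second is 4 gigabytes every second before any duplication.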

SphereLab: The Digital Workshop

Once captured, Big Sky’s output moves into SphereLab, the proprietary GPU-accelerated pipeline that transforms fisheye imagery into dome-ready content. The workflow begins with one-light color passes, allowing quick checks for exposure and tone. From there, SphereLab handles geometric de-warping, mapping, and carving of the content so that every horizon line, face, and motion appears natural from every seat in the house.
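The geometric mapping step can be illustrated with a textbook fisheye model. The sketch below assumes an ideal equidistant projection (image radius proportional to the angle from the lens axis) and a 180-degree field of view. Neither is a published Big Sky or SphereLab parameter, and the function names are hypothetical, so treat this as an illustration of the idea rather than the actual pipeline.

```python
import math


def direction_to_fisheye(azimuth_rad, polar_rad, fov_rad=math.pi):
    """Map a viewing direction to normalized fisheye coordinates in [-1, 1].

    polar_rad is the angle from the lens optical axis (0 = image center).
    Assumes an ideal equidistant fisheye: radius grows linearly with angle.
    """
    half_fov = fov_rad / 2
    if not 0 <= polar_rad <= half_fov:
        raise ValueError("direction lies outside the lens field of view")
    r = polar_rad / half_fov
    return r * math.cos(azimuth_rad), r * math.sin(azimuth_rad)


def to_pixel(u, v, side):
    """Convert normalized coordinates to pixel coordinates on a square image."""
    x = (u + 1) / 2 * (side - 1)
    y = (v + 1) / 2 * (side - 1)
    return x, y


# A direction straight along the lens axis lands at the image center.
u, v = direction_to_fisheye(0.0, 0.0)
print(to_pixel(u, v, side=1001))
```

A de-warping pass runs this lookup in reverse for every LED pixel: it works out which direction that pixel represents from the audience's point of view, then samples the fisheye frame at the corresponding coordinates.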

For Backstreet Boys, SphereLab was also the playground for building the Siberia sequence. Pre-recorded plates of the band’s faces were processed, frozen into digital ice, and then animated back to life. The mapping precision was critical: any drift between the live singers on stage and their 18K avatars towering above would have destroyed the illusion.

Previsualization at Big Dome

Before anything reached Las Vegas, it was tested at the Big Dome in Burbank, a 100-foot geodesic prototype of Sphere used for pre-vis. Here, creative leads could stand inside a scaled-down version of the venue and see how visuals landed in full 360 degrees. For Siberia, the Big Dome allowed the team to choreograph timing, experiment with snow particle systems, and adjust facial animations until they matched live performance energy.

This iterative process mirrors the workflows of IMAX documentary filmmakers: build, test, refine, and only then commit to the big screen.

Real-Time and Pre-Rendered Content

The Backstreet Boys residency blends pre-rendered cinematic plates with real-time live camera integration. For sequences like the countdown to blastoff, visuals were designed weeks in advance using 3D animation and rendered into dome-mapped files. For Siberia, Big Sky captured plates of the performers that were then composited into pre-rendered arctic landscapes.

But not everything was locked. Some visuals were rendered in real time using software such as Notch, Unreal Engine, and TouchDesigner, platforms proven in U2’s residency. Real-time rendering allowed dynamic effects such as falling snow, rippling auroras, and shifting galaxies to flex with the show’s tempo.

VFX Tools and Asset Creation

The environments themselves were crafted with high-end VFX tools. Particle systems for snow and ice likely originated in Houdini, while 3D models of mountains and star fields were developed in Cinema 4D or Maya. Compositing platforms such as Nuke and After Effects handled layering and integration, while DaVinci Resolve managed color precision across the dome’s 170 million pixels.

For facial animation, there is strong evidence of motion-control rigs and facial mocap interpolation. Even without official disclosure, the smooth transitions from static “frozen” faces to animated singing avatars suggest tools that blend high-res photographic plates with AI-assisted morphing.

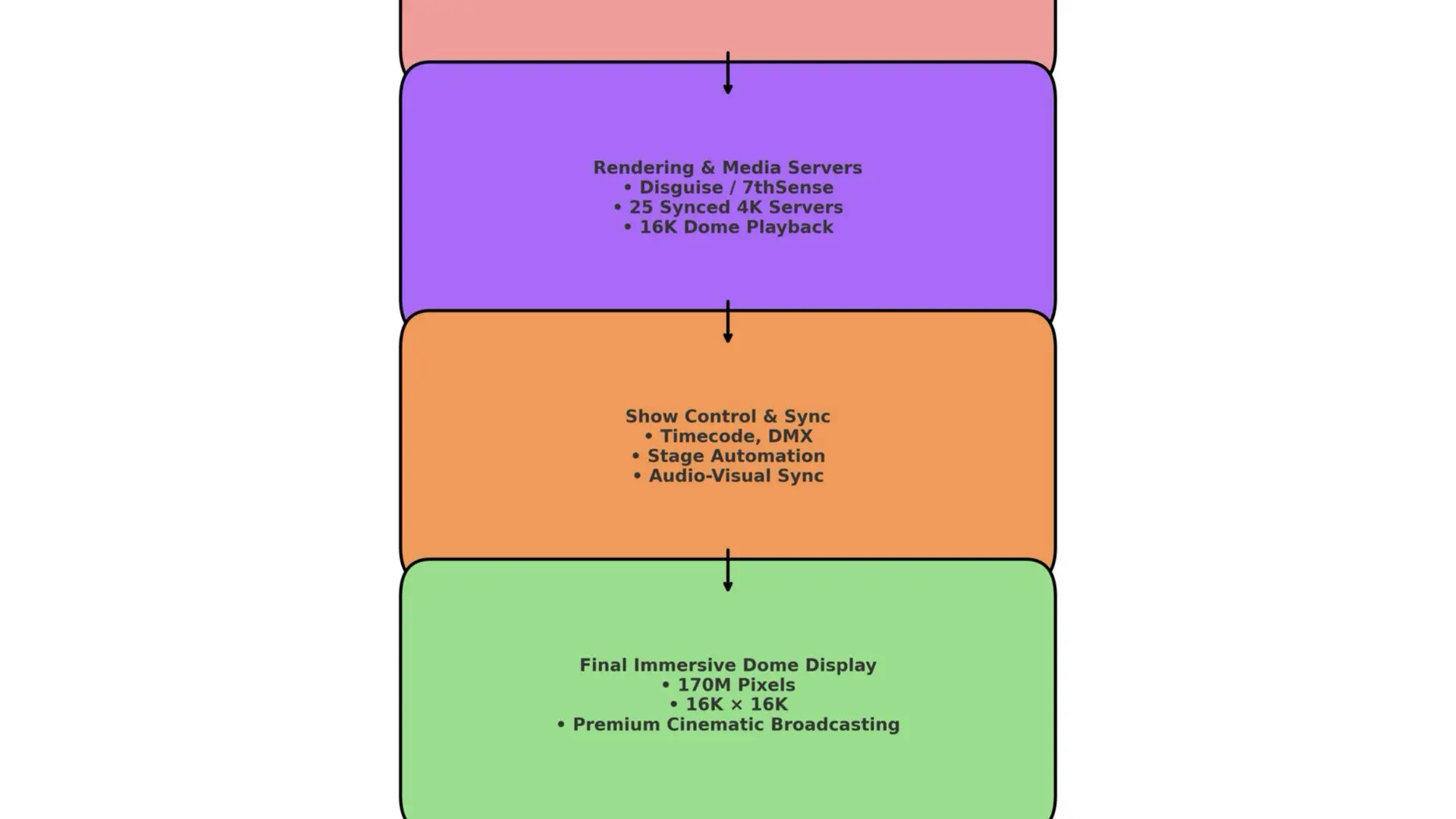

Playback Infrastructure

Sphere’s playback system is its own technical marvel. The dome requires 25 synchronized 4K servers feeding segments of the interior LED wall, all locked to a master clock. Each server handles a slice of the dome’s content, but together they form a seamless 16K × 16K canvas.
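A quick pixel-budget check shows how the numbers fit together. The sketch below combines the 25 servers mentioned above with the 170 million display pixels cited earlier in this article. It assumes that "4K" means a UHD raster of 3840 × 2160 and that the load is spread evenly across servers. Both are assumptions for illustration, not a documented Sphere configuration.

```python
DOME_PIXELS = 170_000_000   # display pixel count cited in this article
SERVER_COUNT = 25           # server count cited in this article
UHD_4K = (3840, 2160)       # assumption: "4K" means a UHD raster


def pixels_per_server(total_pixels, servers):
    """Average number of display pixels each server must drive."""
    return total_pixels / servers


def raster_utilization(total_pixels, servers, raster):
    """Fraction of one output raster needed to carry a server's share."""
    width, height = raster
    return pixels_per_server(total_pixels, servers) / (width * height)


share = pixels_per_server(DOME_PIXELS, SERVER_COUNT)
used = raster_utilization(DOME_PIXELS, SERVER_COUNT, UHD_4K)
print(f"Per-server share: {share:,.0f} px")
print(f"UHD raster utilization: {used:.0%}")
```

Under these assumptions each server drives about 6.8 million pixels, which fits inside a single UHD frame of roughly 8.3 million pixels with some headroom.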

Media servers such as 7thSense or Disguise have been used in Sphere productions before, and it is highly likely that Backstreet Boys relied on the same backbone. These servers not only handle playback but also allow operators to cue sequences in sync with lighting, stage automation, and pyrotechnics.

Synchronization and Show Control

Premium Cinematic Broadcasting is not just about high-end imagery. It’s about perfect synchronization between stage and screen. Timecode systems lock visuals to audio tracks. DMX protocols coordinate lighting and stage lifts. Automation systems control floating platforms, ensuring the band’s elevation matches the visual of spacecraft launches or arctic chasms.
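Timecode-driven cueing can be sketched in a few lines. The example assumes non-drop-frame timecode in HH:MM:SS:FF form and an integer frame rate. The cue names and times are hypothetical placeholders, not taken from the actual show file.

```python
def timecode_to_frames(timecode, fps):
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    if not (0 <= mm < 60 and 0 <= ss < 60 and 0 <= ff < fps):
        raise ValueError(f"invalid timecode: {timecode}")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames, fps):
    """Convert an absolute frame count back to 'HH:MM:SS:FF'."""
    ss, ff = divmod(frames, fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def due_cues(cues, now_timecode, fps):
    """Return the names of all cues whose trigger time has been reached."""
    now = timecode_to_frames(now_timecode, fps)
    return [name for tc, name in cues if timecode_to_frames(tc, fps) <= now]


# Hypothetical cue list: (trigger timecode, cue name).
cues = [
    ("00:00:10:00", "lift_platform_up"),
    ("00:00:12:15", "snow_particles_on"),
    ("00:01:00:00", "faces_animate"),
]
print(due_cues(cues, "00:00:30:00", fps=30))
```

Because every department converts the same timecode to the same frame number, lighting, automation, and video can each fire their own cues without talking to one another directly.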

For Siberia, timing was especially delicate: the moment a live singer hits a vocal peak had to align with their giant digital face animating above. This required meticulous cue rehearsals and latency testing, reducing system lag to imperceptible levels.
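Latency testing of this kind usually comes down to a budget: add up the delay of each stage and compare the total against a target. The sketch below shows that bookkeeping. Every stage name and millisecond value is a made-up placeholder, since no latency figures for the show have been published, and the budget is left as a parameter rather than a claimed threshold.

```python
def total_latency_ms(stages):
    """Sum per-stage latencies, given as a dict of stage name to milliseconds."""
    return sum(stages.values())


def lag_in_frames(latency_ms, fps):
    """Express a latency in milliseconds as a number of video frames."""
    return latency_ms * fps / 1000.0


def within_budget(stages, budget_ms):
    """True if the end-to-end latency does not exceed the budget."""
    return total_latency_ms(stages) <= budget_ms


# Placeholder values for illustration only; not measured from any real system.
example_stages = {
    "camera_capture": 8.0,
    "render": 16.0,
    "led_processing": 10.0,
}

total = total_latency_ms(example_stages)
print(f"Total: {total} ms, or {lag_in_frames(total, fps=60):.2f} frames at 60 fps")
print("Within a 40 ms budget:", within_budget(example_stages, budget_ms=40.0))
```

Expressing the total in frames is useful because a fixed lag can often be compensated by delaying the other signal chain by the same whole number of frames.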

Sound and Visual Convergence

The Sphere is also a sound machine. Its beamforming audio arrays allow specific seats to hear specific mixes, creating a personalized soundtrack. In Siberia, as snow swirls across the dome, whispers of wind pan across the audience. This convergence of sound and image is what elevates the show from multimedia to cinema.
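The basic idea behind beam steering can be shown with the textbook delay-and-sum model for a uniform line of speakers: delaying each element slightly more than its neighbor tilts the combined wavefront toward a chosen angle. This is a generic sketch, not a description of Sphere’s actual audio system. The element count, spacing, and steering angle are arbitrary illustrative values.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at 20 °C


def steering_delays(num_elements, spacing_m, angle_deg, c=SPEED_OF_SOUND_M_S):
    """Per-element delays (seconds) that steer a uniform linear array.

    angle_deg is measured from the array's broadside direction.
    Delays are shifted so the smallest is zero, keeping them all non-negative.
    """
    theta = math.radians(angle_deg)
    raw = [n * spacing_m * math.sin(theta) / c for n in range(num_elements)]
    offset = min(raw)
    return [delay - offset for delay in raw]


# Illustrative values: 4 elements, 10 cm apart, steered 30 degrees off broadside.
for index, delay in enumerate(steering_delays(4, 0.10, 30.0)):
    print(f"element {index}: {delay * 1e6:.1f} microseconds")
```

Steering to a negative angle simply reverses the order of the delays, which is how a sound such as wind can appear to sweep from one side of the audience to the other.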

Tools Beyond the Dome

While Big Sky steals headlines, many other cameras were used. Conventional broadcast systems provided IMAG (image magnification) on smaller stage screens. High-end cinema cameras such as the Sony VENICE and ARRI ALEXA Mini LF may have been used for capturing rehearsal plates or supplementary content. These cameras do not feed the dome but provide supporting visuals and marketing material.

In effect, the Backstreet Boys residency is a multi-camera orchestra. Big Sky provides the IMAX shot. Traditional cinema cameras provide supporting coverage. Broadcast rigs capture live close-ups. Together, they form a layered ecosystem of imaging tools, each serving a role in Premium Cinematic Broadcasting.

Human Coordination

None of this tech matters without the people. The show demanded directors of photography familiar with dome imaging, VFX supervisors comfortable with 18K workflows, real-time graphics programmers, media server operators, stage automation engineers, and traditional broadcast crews. The scale is closer to a Hollywood feature than a Vegas residency. The coordination is why YMCinema defines this as a watershed moment. A pop concert has never required this many cinema-class disciplines working in unison.

Why This Section Matters

This deep-dive reveals the hidden infrastructure behind what casual fans see as spectacle. For the first time, a mainstream residency fused the Big Sky camera, SphereLab pipeline, Big Dome rehearsal, GPU render farms, VFX suites, real-time rendering engines, synchronized media servers, and stage automation into one cohesive system. This is Premium Cinematic Broadcasting realized: a live event executed with the seriousness, scale, and tools of IMAX filmmaking.

- Cultural reach: With nearly 350,000 fans attending, this is the largest exposure yet for Big Sky’s capabilities.

- Technical proof: The residency shows that the 18K capture pipeline is not only viable for controlled film projects but also sustainable for a nightly live residency.

- Industry precedent: If Backstreet Boys can harness IMAX-level imaging, what prevents future residencies by Beyoncé, Taylor Swift, or Lady Gaga from demanding the same?

- Economic model: Premium Cinematic Broadcasting could redefine ticket pricing and distribution. Imagine streaming a Sphere show in full 16K fidelity to theaters worldwide, as IMAX events once did.

The IMAX Parallel

IMAX began as an experimental documentary format, capturing large-format imagery for museums and festivals. It took decades before it became mainstream through blockbusters. Sphere’s Big Sky is on a similar trajectory. With Wizard of Oz and Postcard from Earth, it demonstrated pure cinematic potential. With Backstreet Boys, it enters popular culture. This residency is the Apollo 11 moment: the first true mission after years of prototype launches. Premium Cinematic Broadcasting has been theorized, tested, and rehearsed. Now it has been deployed.

Takeaway

The Backstreet Boys’ Into the Millennium show at Sphere is the first real-world demonstration of Premium Cinematic Broadcasting, the convergence of pop performance and elite cinema technology. Using the Big Sky camera, a system capable of capturing 18K imagery at 32 gigabits per second, the residency turns nostalgia into an IMAX-scale experience. YMCinema has documented the road to this moment through “The Wizard of Oz 16K Sphere Project: Reimagining Cinema with Cutting-Edge Technology,” “The Big Sky Cinema Camera: Breaking Down the Patent Behind the Las Vegas Sphere’s Cutting-Edge Imagery,” “V-U2: An Immersive Concert Shot on the Big Sky Cinema Camera,” and many other stories. But this residency proves the point. Premium Cinematic Broadcasting is here. And it looks like the future of live entertainment.