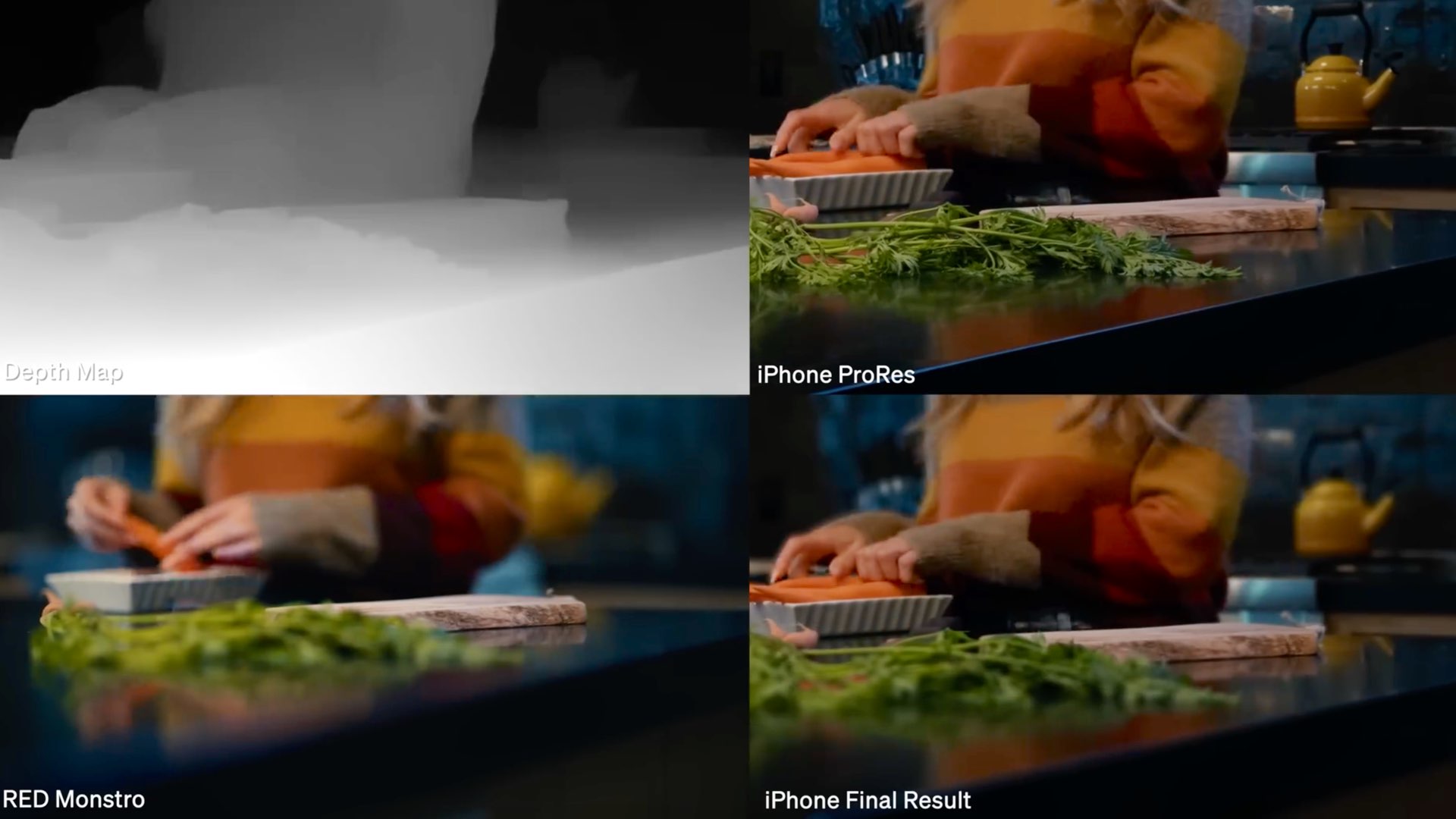

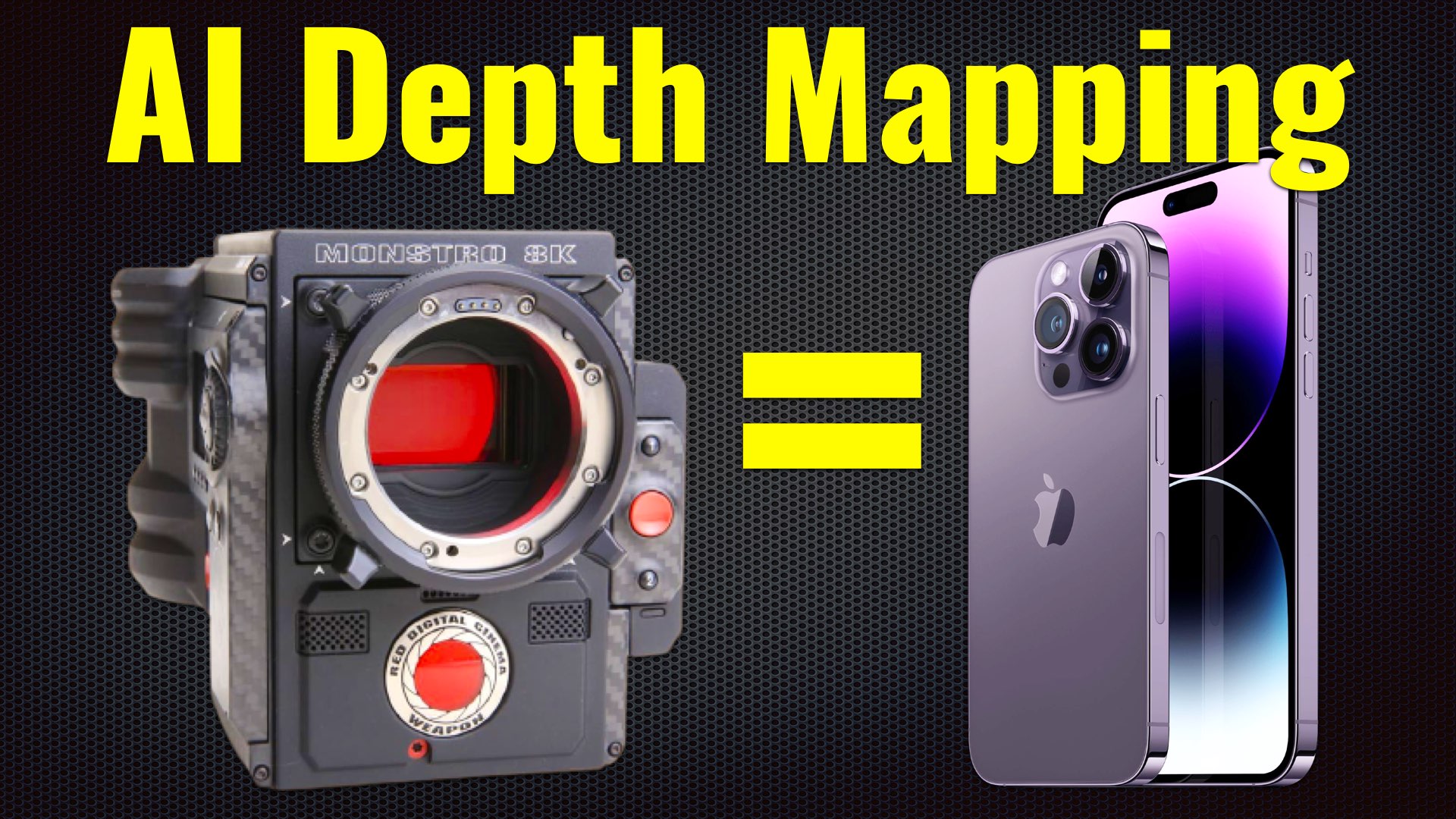

Yes, we know. A lot of you will call this headline clickbait. But behind it is the thesis that AI technology is bringing smartphones closer to high-end cinema cameras. A case in point is this demonstration by Michael Cioni of AI depth mapping that improves smartphone bokeh by making it more cinematic. Think of it as a cinematic bokeh model for smartphones. Cioni compares the iPhone 15 bokeh to the RED Monstro bokeh. See the shots. You would not know which is which.

The iPhone will be a powerful cinema camera

It seems that Apple is planning something. Building and improving the cinematic capabilities of the iPhone is part of Apple’s roadmap. You all remember Apple’s event shot entirely on the iPhone 15 Pro (plus $70,000 of cinema equipment). Furthermore, there are dedicated apps that transform the iPhone into a filmmaking machine. The best of them is the Blackmagic Camera app. Nowadays, the iPhone can shoot 4K, log, and ProRes. Those specs are enough to make a movie. Even a couple of years ago, Apple granted the elite cinematographer Emmanuel Lubezki the honor of making a commercial shot entirely on the iPhone 12 Pro. And the iPhone 15 Pro is much better in terms of cinematic capabilities. Just think what the iPhone 18 Pro will be capable of. Insane!

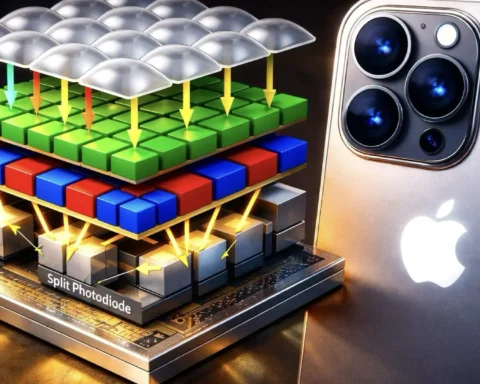

AI Depth Mapping = Improved Cinematic Mode

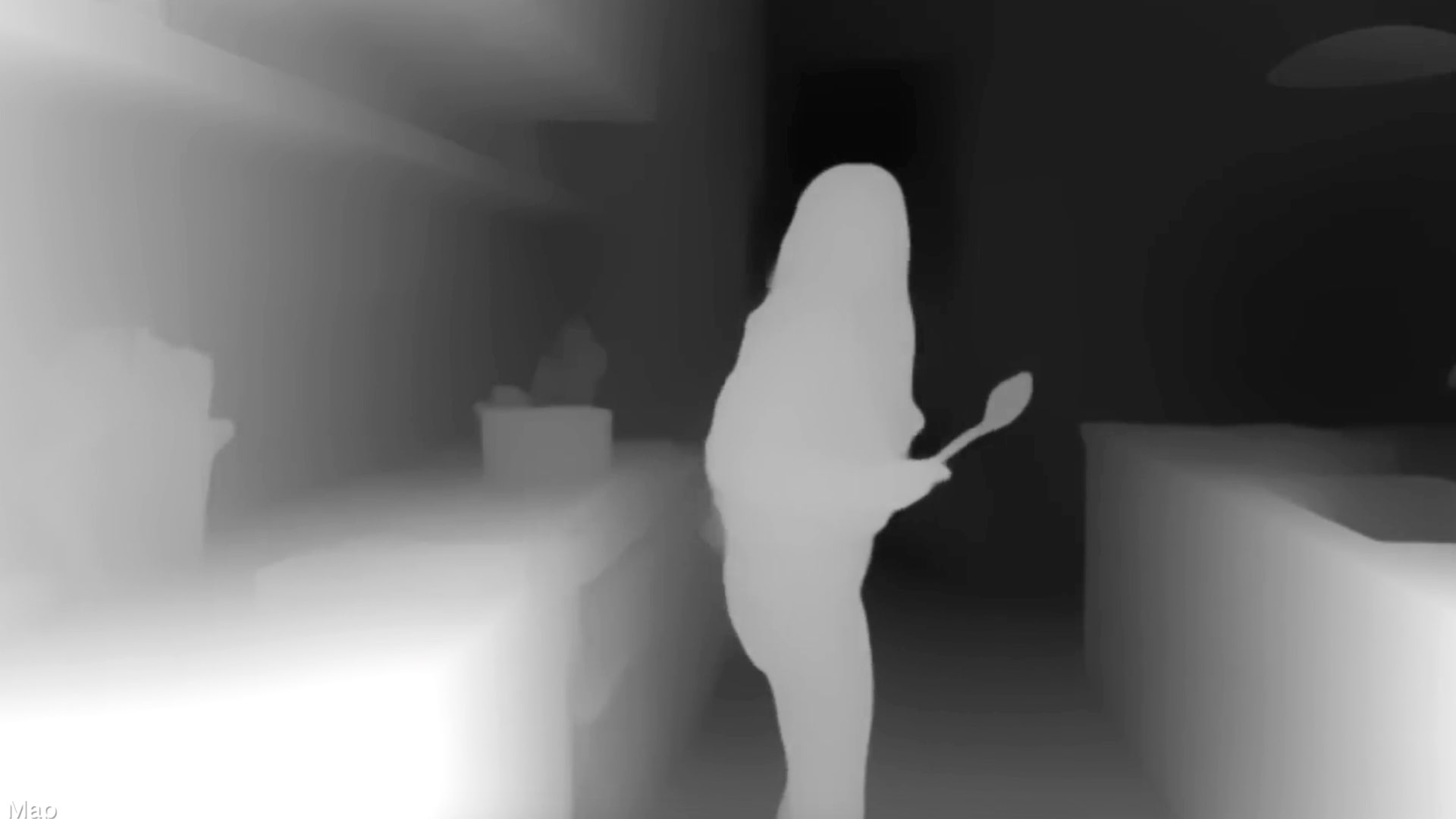

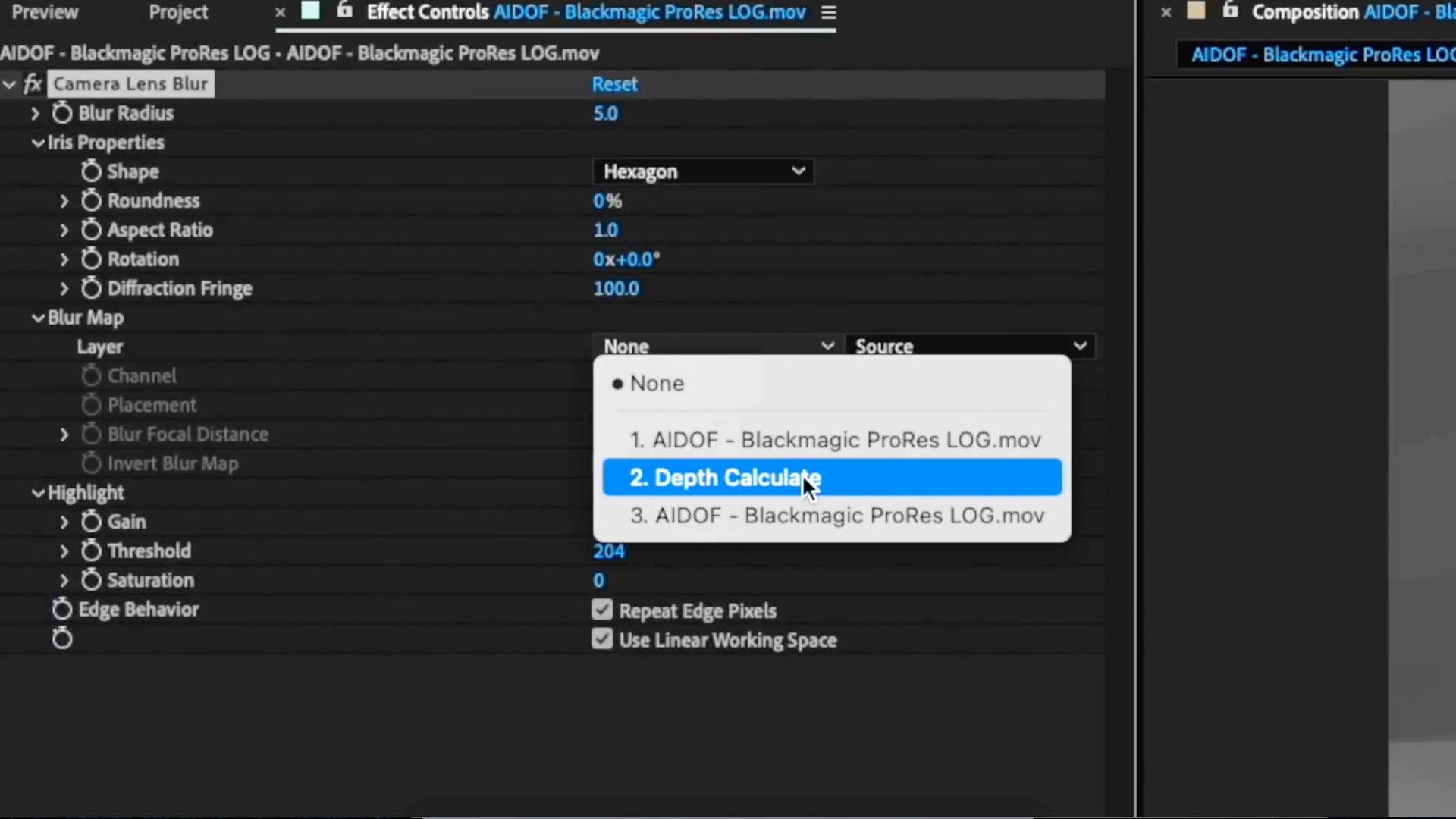

Many filmmakers use the iPhone’s Cinematic Mode to achieve a nice bokeh. However, this bokeh is created by Apple Spatial Mapping. You can’t shoot an entire movie with it, as it has artifacts and looks much less cinematic than real optical bokeh. Cinematic Mode works for Instagram Reels rather than an actual movie. To give iPhone footage an improved bokeh, blace.ai developed a plugin called Depth Scanner. This technology analyzes 2D images and converts them into depth layers encoded as luminance, which then drive a dimensional blur. According to the demonstration, the plugin can be installed in After Effects. This AI depth mapping can ‘upgrade’ smartphone bokeh and make it more cinematic. You can call it a bokeh model for smartphones.

How it works: AI analysis, luminance, and bokeh

Thanks to AI analysis, ProRes files from the iPhone can be given a synthetic depth of field (DOF) by mapping luminance to a percentage of lens blur. In the depth map, objects in the background are dark and objects in the foreground are white. The transition between them represents the geometric distance in three dimensions, which allows us to apply a dimensional blur in post. Compared with the iPhone’s Cinematic Mode and its Apple Spatial Mapping, the AI depth mapping applied in post gives cleaner lines, more realistic blur, and more convincing bokeh properties. These bokeh properties look very similar to the bokeh created by the VistaVision sensor of the RED Monstro, which is a high-end cinema camera. As Cioni states: “The point here is not to replicate cinema cameras to people that have them, but to provide cinematic characteristics for the people who don’t”.
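To make the luminance-to-blur idea concrete, here is a minimal Python sketch. It is not the Depth Scanner algorithm, which the demonstration does not detail. The function names, the 8-bit depth values, the `focus_luma` parameter, and the simple box average used as the blur are all assumptions made for illustration.

```python
def blur_percent(depth_luma: int, focus_luma: int = 255) -> float:
    """Map an 8-bit depth-map luminance to a lens-blur percentage (0-100).

    Assumption: white (255) is nearest, black (0) is farthest, and
    focus_luma is the luminance of the plane we want to keep sharp.
    """
    if not (0 <= depth_luma <= 255 and 0 <= focus_luma <= 255):
        raise ValueError("luminance values must be in 0..255")
    # Normalize by the largest possible distance from the focus plane.
    max_dist = max(focus_luma, 255 - focus_luma)
    return 100.0 * abs(depth_luma - focus_luma) / max_dist


def depth_blur_row(pixels, depth, focus_luma=255, max_radius=3):
    """Blur one scanline with a per-pixel radius driven by the depth map.

    A box average stands in for a real lens-shaped bokeh kernel.
    """
    if len(pixels) != len(depth):
        raise ValueError("pixels and depth must have the same length")
    out = []
    for i, d in enumerate(depth):
        # Scale the blur percentage to a pixel radius.
        radius = round(max_radius * blur_percent(d, focus_luma) / 100)
        lo = max(0, i - radius)
        hi = min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Foreground (white in the depth map) stays sharp; background is averaged.
row = depth_blur_row(
    pixels=[0, 100, 0, 100, 0, 100],
    depth=[255, 255, 255, 0, 0, 0],
    max_radius=1,
)
```

In this toy scanline the first three samples sit on the focus plane and pass through untouched, while the last three are averaged with their neighbors. A production tool would work on 2D frames and use a disc or aperture-shaped kernel rather than a box average.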

Closing thoughts

At this point, you might be thinking that this demonstration is just a nicely executed gimmick. However, don’t think about the iPhone 15 Pro. Think about the iPhone 18 Pro armed with Apple Silicon, 12K, ProRes RAW, 12-bit, and so on (let’s imagine). Now implement that AI tech in it, and you will get something even more powerful than the RED Monstro. Share your insights in the comments below.

Where to start… ProRes RAW isn’t actually raw. R3D is 16-bit, not 12-bit, and the pixel pitch on a 12K phone sensor will be tiny, which makes it look less cinematic.

All in all, not even close to a RED Monstro or an ALEXA Mini LF, etc. Not to mention the lens factor. Phones can’t replace great lenses.

Will it be better than today’s phones… sure. But beauty and authentic cinema are more than technology.

The video makes it pretty clear in its conclusion that the point of this experiment is NOT to replace the industry standard but to make filmmaking accessible to those who are constrained or daunted by the lack of access to equipment.