Canon, Sony, and Nikon have decided to join forces to fight AI-faked imagery. Their weapon is a “new camera tech”: a digital signature embedded in each image that serves as proof that it is real and not AI-generated. The technology will be implemented on flagship mirrorless cameras and could be extended to video as well.

Canon, Sony, and Nikon fight AI

As stated by Nikkei Asia: “Nikon, Sony Group and Canon are developing camera technology that embeds digital signatures in images so that they can be distinguished from increasingly sophisticated fakes.” According to the report: “Such efforts come as ever-more-realistic fakes appear, testing the judgment of content producers and users alike. Deepfakes of former U.S. President Donald Trump and Japanese Prime Minister Fumio Kishida went viral this year.” The new technology will be rolled out through future firmware updates during this year, although it is not yet clear whether it will be free of charge.

It’s important to note that the capacity for creating fake images is growing. For instance, Nikkei states that in October, researchers from China’s Tsinghua University proposed a new generative AI technology called a latent consistency model, which can produce roughly 700,000 images daily. Thus, technology companies are taking steps to fight the spread of fake content. Indeed, AI-generated imagery is dramatically affecting the filmmaking industry. Here are a few articles we wrote about it:

- DaVinci Resolve 18.6: TensorRT Elevates AI Performance by 50%

- IMAX CEO: “We use AI to blowup images”

- The ‘Inevitable’ Future of AI Filmmaking

- Adobe Firefly has Generated (Unfortunately) Over 1 Billion Images Since Launch

- Director Joe Russo: AI Will Engineer Storytelling

- AI Prompt-Based NLEs Will Kill Editing As We Know It

- Midjourney Is Being Class-Action Sued for Severe Copyright Infringements

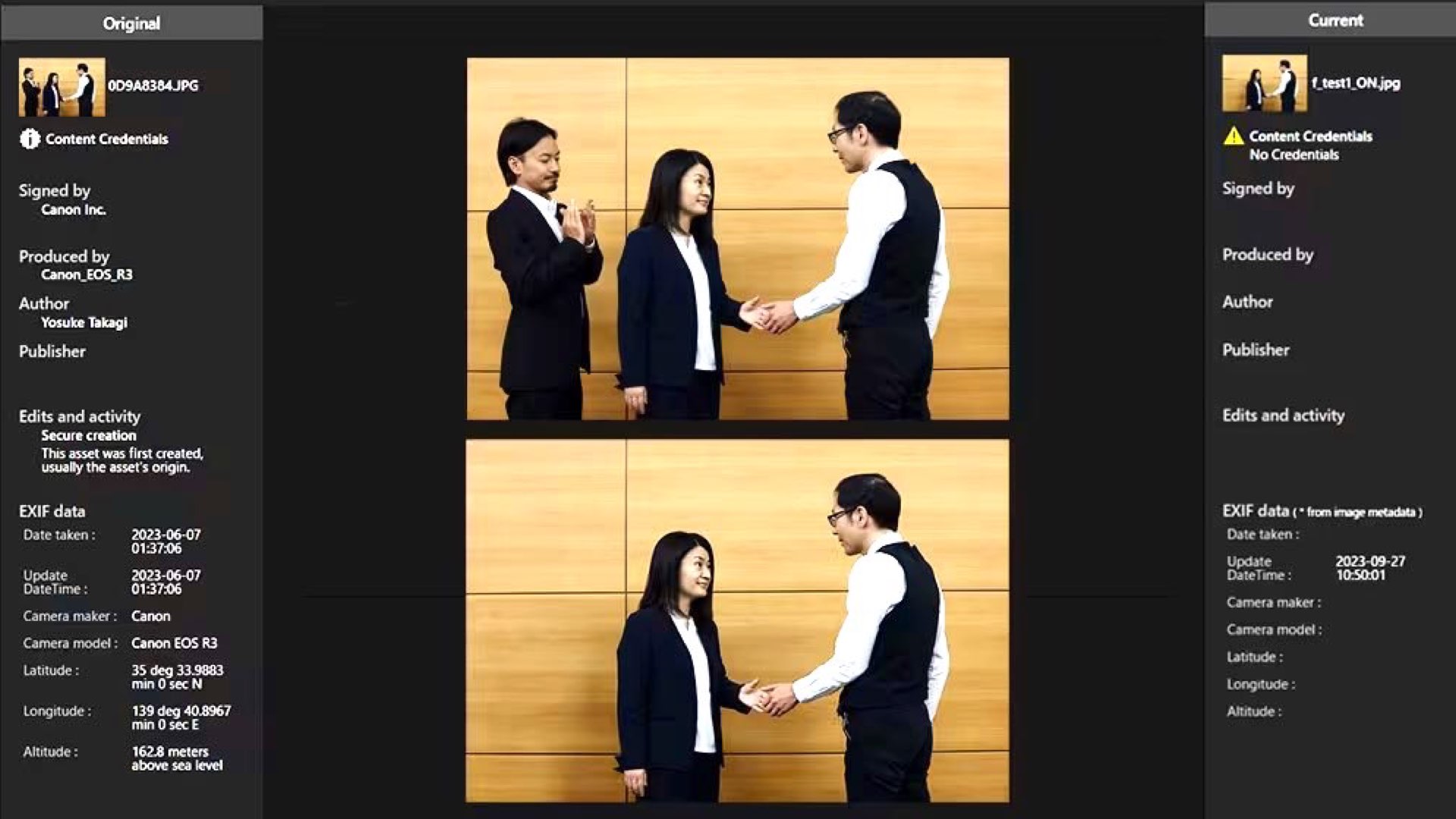

Verify: A new anti-AI tool

Nikkei says, “An alliance of global news organizations, technology companies, and camera makers has launched a web-based tool called Verify for checking images free of charge. If an image has a digital signature, the site displays the date, location, and other credentials. The digital signatures now share a global standard used by Nikon, Sony, and Canon. Japanese companies control around 90% of the global camera market.” Conversely, if an image has been created with artificial intelligence or tampered with, the Verify tool flags it as having ‘No Content Credentials’. Unfortunately, we haven’t found any further information about this anti-AI tool beyond Nikkei’s description of it as web-based.

Focusing on high-end mirrorless cameras

It appears that in the spring of 2024, Sony will release a firmware update that incorporates digital signatures into three professional-grade mirrorless cameras (the models have not been named). Furthermore, Sony is considering making the technology compatible with video as well. Extending it to video would be a real challenge, but a necessary one, as AI-generated videos are becoming increasingly common. Additionally, Sony will expand its lineup of compatible camera models and lobby other media outlets to adopt the technology.

As for Canon, it will release a camera with similar features as early as 2024. The company is also developing technology that adds digital signatures to video.

Nikon will offer mirrorless cameras with authentication technology for photojournalists and other professionals. The tamper-resistant digital signatures will include such information as the date, time, location, and photographer.

How will it work? Server-side

When a photographer sends images to a news organization, Sony’s authentication servers detect the digital signatures and determine whether the images are AI-generated. Nikkei reports that Sony and The Associated Press field-tested this tool in October. Furthermore, Canon put together a project team in 2019 and has formed a development tie-up with Thomson Reuters and the Starling Lab for Data Integrity, an institute co-founded by Stanford University and the University of Southern California. In addition, Canon is releasing an image management app that can tell whether images were taken by humans.
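The server-side check rests on a standard public-key idea: the camera signs a hash of the image plus its metadata with a private key, and anyone holding the matching public key can confirm that nothing changed afterward. The Python sketch below illustrates that flow under loudly stated assumptions. It uses textbook RSA built from two publicly known Mersenne primes, so it is a toy with no real security. The function names (`sign_capture`, `verify_capture`), the JSON manifest layout, and the status strings are hypothetical and are not the actual format used by Nikon, Sony, Canon, or the Verify tool.

```python
import hashlib
import json

# TOY key pair (assumption for illustration only): textbook RSA built from the
# publicly known Mersenne primes 2**521 - 1 and 2**607 - 1. Anyone can factor N,
# so this offers NO security; it only shows the sign/verify asymmetry.
P = 2**521 - 1
Q = 2**607 - 1
N = P * Q                          # public modulus (shared with verifiers)
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (would stay inside the camera)


def _manifest_bytes(manifest: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash identical bytes."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")


def _digest(data: bytes) -> int:
    """SHA-256 digest as an integer; 256 bits is always smaller than N."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign_capture(image: bytes, date: str, location: str, photographer: str) -> dict:
    """Hypothetical in-camera step: bind pixels and metadata, then sign with D."""
    manifest = {
        "date": date,
        "location": location,
        "photographer": photographer,
        "image_sha256": hashlib.sha256(image).hexdigest(),
    }
    signature = pow(_digest(_manifest_bytes(manifest)), D, N)
    return {"manifest": manifest, "signature": signature}


def verify_capture(image: bytes, credentials) -> str:
    """Hypothetical server-side check that needs only the public key (N, E)."""
    if credentials is None:
        return "No Content Credentials"
    manifest = credentials["manifest"]
    # Any edit to the pixels changes their hash.
    if hashlib.sha256(image).hexdigest() != manifest["image_sha256"]:
        return "Tampered: pixels changed"
    # Any edit to the metadata (or a forged signature) fails the RSA check.
    if pow(credentials["signature"], E, N) != _digest(_manifest_bytes(manifest)):
        return "Tampered: metadata or signature changed"
    return "Valid: " + ", ".join([manifest["date"], manifest["location"], manifest["photographer"]])


if __name__ == "__main__":
    photo = b"raw sensor bytes"
    creds = sign_capture(photo, "2024-03-01", "Tokyo", "Jane Doe")
    print(verify_capture(photo, creds))                  # Valid: 2024-03-01, Tokyo, Jane Doe
    print(verify_capture(photo + b"edit", creds))        # Tampered: pixels changed
    print(verify_capture(b"ai-generated bytes", None))   # No Content Credentials
```

Hashing the pixels into the signed manifest means that editing either the image or any metadata field breaks verification, while an image that carries no manifest at all simply reports “No Content Credentials”, mirroring the behavior Nikkei describes for Verify.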

The war against AI-generated imagery

In August, Google released a tool that embeds invisible digital watermarks into AI-generated pictures. In 2022, Intel developed technology to determine whether an image is authentic by analyzing skin color changes that indicate blood flow under subjects’ skin. Hitachi is developing fake-proofing technology for online identity authentication. And now, camera companies are joining the war against AI-generated imagery. We hope that AI-generated videos will soon be marked as ‘not-human’ art as well. That would help protect artists who invest enormous effort in their original work.
