Apple’s brand new patent application US 20260006927 A1, titled Image-sensing Array With Autofocus Pixels And Enhanced Resolution, focuses on a problem filmmakers run into all the time, even when they do not name it directly. Autofocus can be fast, or footage can look clean and sharp, but achieving both at once is harder than it should be. This patent describes a sensor design that aims to keep autofocus reliable while protecting the parts of the image that carry most of the visible detail.

Stability first, then focus

A useful way to think about this is stability first, then focus, then image reconstruction. Apple has already explored the stability side in Apple iPhone Sensor-Shift Stable Focus Handheld Video, where the goal is to keep handheld footage calmer and reduce the kind of micro motion that makes focus look nervous. US 20260006927 A1 fits naturally next to that, because it tries to make the autofocus system itself less damaging to image sharpness.

Apple starts from a basic truth about modern color sensors. Most sensors use a Bayer-style color pattern where green is sampled more than red or blue. That matters because green carries most of the brightness information the camera uses to build detail. In plain English, green pixels are where most of the sharpness comes from. The patent explains that when phase detection autofocus pixels sit inside green regions, they can slightly reduce resolution there, because the optical structures used for autofocus can introduce small local blur. For filmmakers, that blur is not just a still photo issue. It can show up as a soft look in fine textures, skin detail, hair, fabric, and distant edges, especially during motion and focus transitions.
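To make the "green is sampled more" point concrete, here is a minimal sketch of a standard Bayer-style layout. The RGGB ordering and the helper names `bayer_color` and `color_share` are assumptions for illustration only. The patent's actual array is built from photodetector pairs and is not reproduced here.

```python
from collections import Counter

# One repeating 2x2 tile of a Bayer-style filter.
# RGGB ordering is an assumption made for this illustration.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]

def bayer_color(row, col):
    """Return the filter color at a sensor site for the assumed RGGB tile."""
    return BAYER_TILE[row % 2][col % 2]

def color_share(height, width):
    """Fraction of sensor sites covered by each filter color."""
    counts = Counter(bayer_color(r, c)
                     for r in range(height)
                     for c in range(width))
    total = height * width
    return {color: counts[color] / total for color in "RGB"}

shares = color_share(4, 4)
print(shares)  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

Half of the sites are green, which is why any blur introduced in green regions costs more visible detail than the same blur under red or blue.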

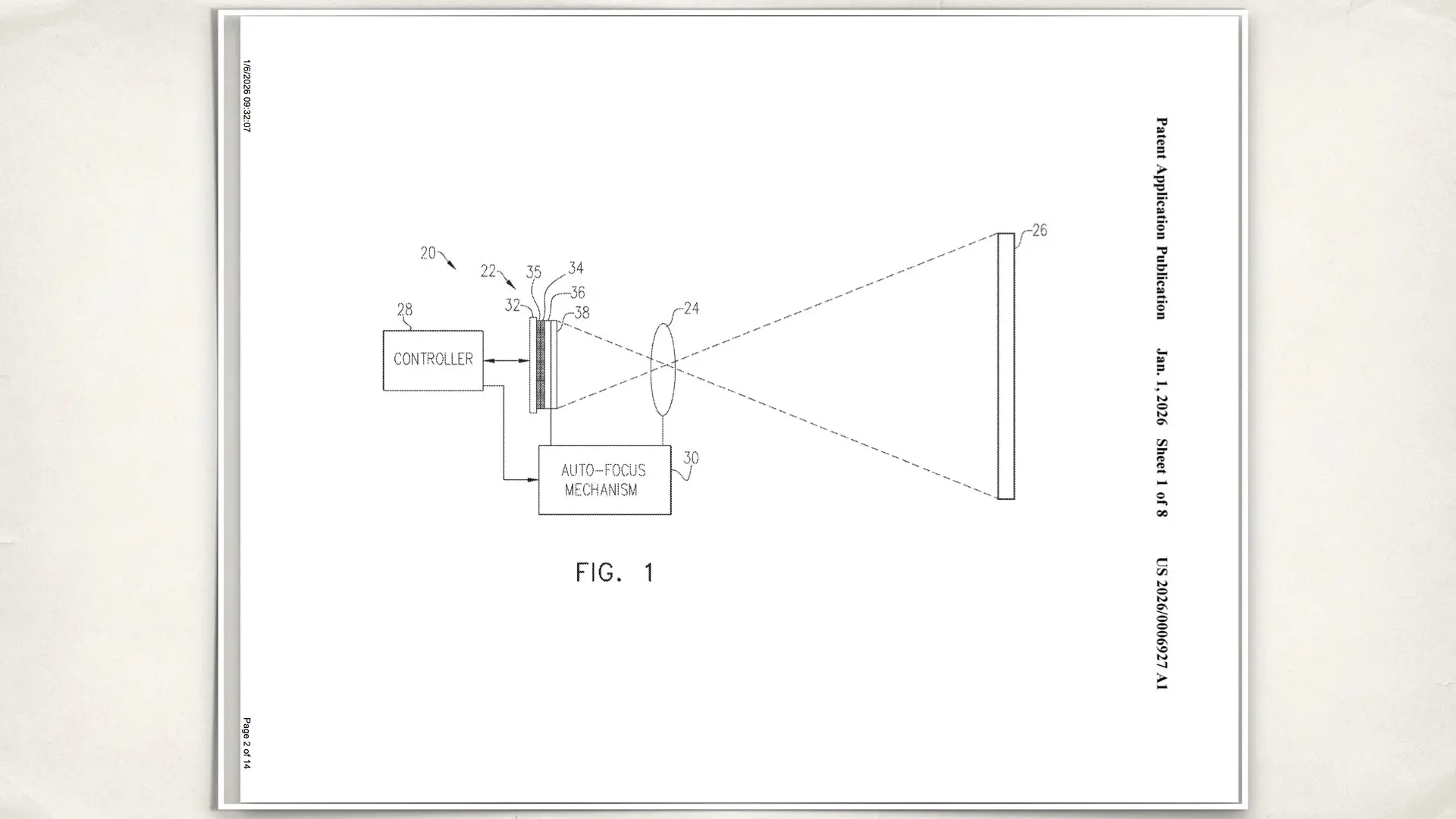

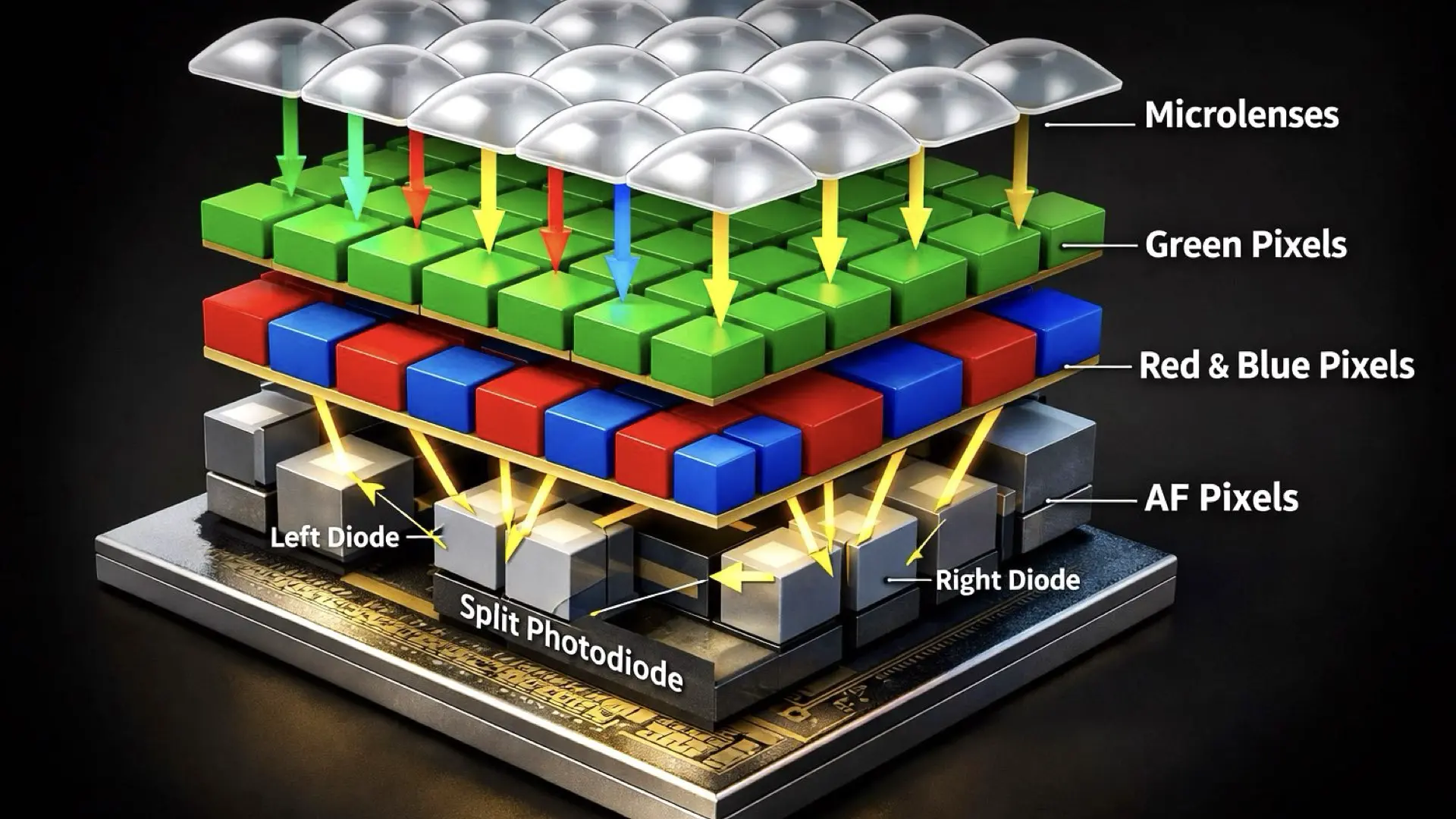

Split pixel design

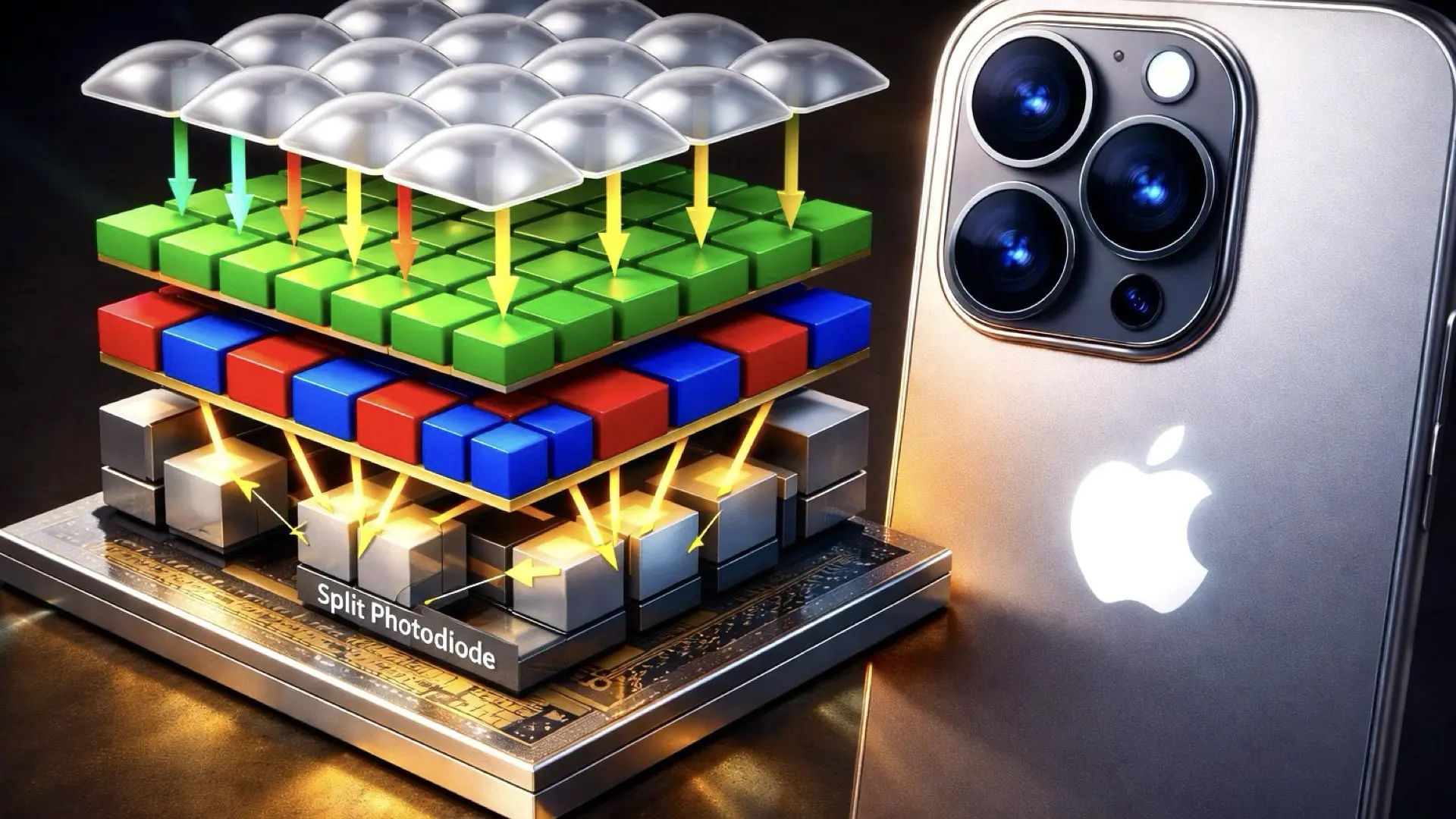

This is where Apple’s approach becomes very direct. The patent proposes a split pixel design where the sensor is built from pairs of photodetectors. The readout circuits can operate in one mode where each photodetector is read separately for maximum detail, and in a second mode where the two are binned together for higher sensitivity. That binning matters for filmmakers because low-light video is usually where phones struggle, and binning is a classic way to improve signal quality when the scene is dark. The same paired structure can also be used for autofocus by comparing the two signals, which is the phase detection concept in a practical form.

The key choice in this patent is where Apple places the autofocus-capable pairs. Apple describes putting the phase detection autofocus pairs under red and blue color filter tiles, and leaving green areas dedicated to resolution. That means red and blue pairs can use shared microlenses that support phase detection, while green pairs can use individual microlenses over each photodetector to preserve sharpness. Apple is effectively saying that autofocus should live where it is least likely to harm perceived detail, because the green channel is the core of spatial resolution in most reconstruction pipelines.
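The two readout modes and the phase comparison can be sketched in a few lines. This is a simplified illustration, not the patent's circuitry: the function names are hypothetical, binning is modeled as a plain sum of the two photodetector values, and the phase estimate uses a basic search for the shift with the smallest mean absolute difference.

```python
def read_full_resolution(pairs):
    """Mode 1: read each photodetector of every pair separately."""
    return [value for pair in pairs for value in pair]

def read_binned(pairs):
    """Mode 2: combine both photodetectors of a pair into one sample.

    Modeled here as a simple sum (an assumption for illustration).
    """
    return [left + right for left, right in pairs]

def phase_shift(left, right, max_shift=3):
    """Estimate the offset, in samples, between the left and right signals.

    Tries each candidate shift and keeps the one with the smallest mean
    absolute difference over the overlapping samples. A result of 0 means
    the two views line up, which corresponds to an in-focus subject.
    """
    best_shift, best_cost = 0, float("inf")
    n = len(left)
    for shift in range(-max_shift, max_shift + 1):
        diffs = [abs(left[i] - right[i + shift])
                 for i in range(n) if 0 <= i + shift < n]
        cost = sum(diffs) / len(diffs)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift

# Three photodetector pairs (made-up values).
pairs = [(10, 12), (40, 38), (7, 9)]
print(read_full_resolution(pairs))  # [10, 12, 40, 38, 7, 9]
print(read_binned(pairs))           # [22, 78, 16]

# The same edge seen by the left and right halves, offset by two samples.
left_view = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0]
right_view = [0, 0, 0, 0, 1, 5, 9, 5, 1, 0]
print(phase_shift(left_view, right_view))  # 2
```

A nonzero shift tells the camera the subject is out of focus and in which direction to move the lens. A shift of zero means the two half-views agree.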

Advantages for filmmakers

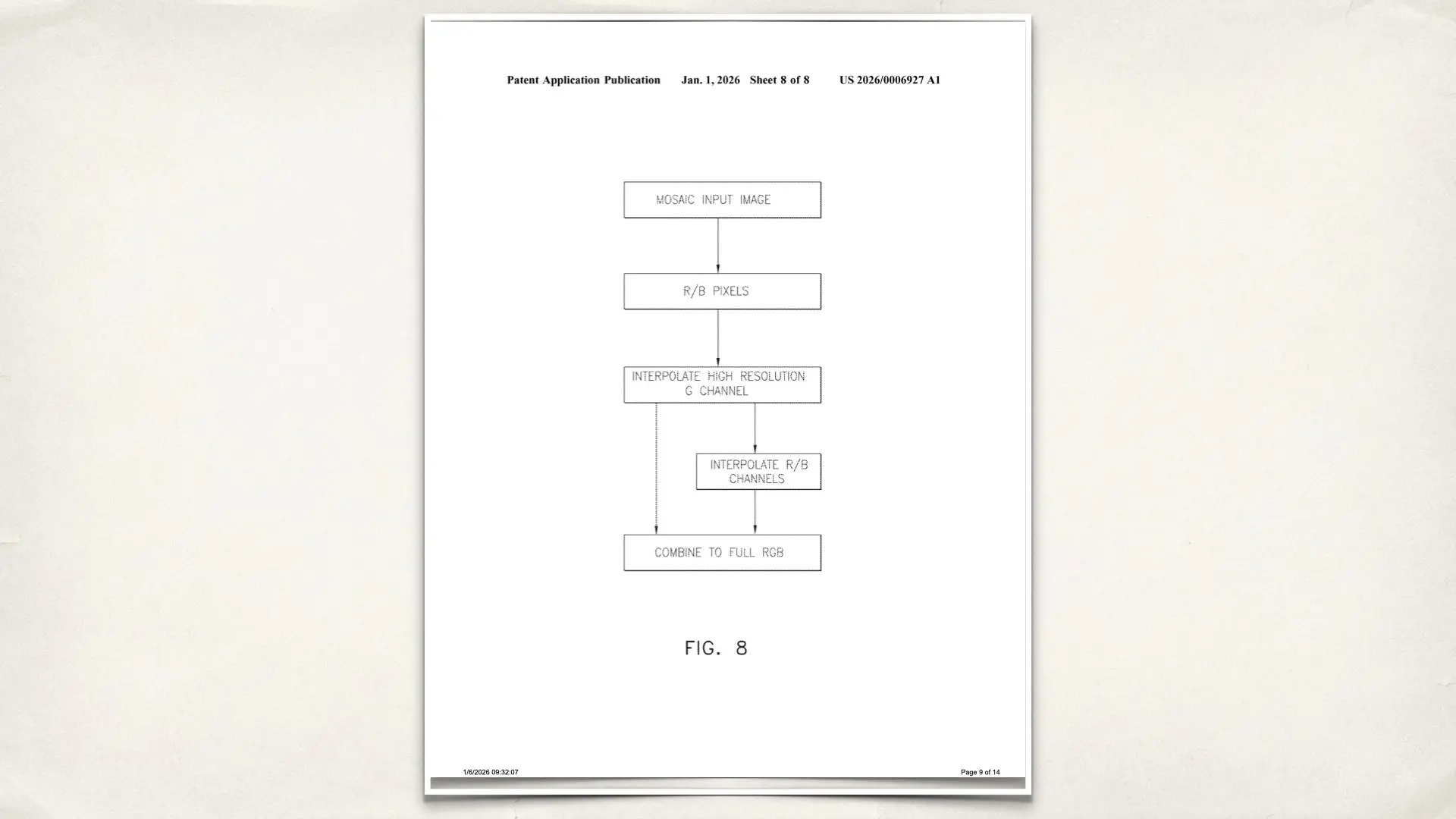

Autofocus systems can introduce a subtle softness during tracking because the sensor is doing more than simply capturing clean luminance data. When the autofocus architecture overlaps with the channel that carries most detail, the footage may look less crisp even when focus is correct. Apple’s design aims to reduce that compromise by keeping the green channel clean. If a future iPhone sensor follows this blueprint, focus transitions could look more stable and edges could hold texture better while the camera is actively judging focus.

The patent also explains how the camera could rebuild full color from this mosaic. It describes computing a high-resolution green image first, then interpolating red and blue using the green image as a guide. That is an important point for filmmakers because most of what makes video look detailed is not color saturation. It is the stability of luminance detail over time. When green is cleaner, the camera has a stronger foundation to build stable frames with fewer artificial sharpening artifacts, fewer temporal inconsistencies, and a more natural texture.
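The patent's exact reconstruction method is not spelled out in this article, so the following is only a sketch of one common way to use green as a guide: interpolate the color difference (red minus green) between the sparse red samples, then add the full-resolution green back. The one-dimensional row, the sample values, and the function name `interpolate_red_guided` are all assumptions for illustration.

```python
def interpolate_red_guided(green, red_samples):
    """Fill in red at every site using the green image as a guide.

    green: full-resolution green values, one per site.
    red_samples: dict mapping site index -> measured red value (sparse).
    The difference (red - green) is interpolated linearly between the
    measured sites and held constant outside them, then green is added back.
    """
    known = sorted(red_samples)
    diff = {i: red_samples[i] - green[i] for i in known}
    red = []
    for i in range(len(green)):
        if i <= known[0]:
            d = diff[known[0]]
        elif i >= known[-1]:
            d = diff[known[-1]]
        else:
            left = max(k for k in known if k <= i)
            right = min(k for k in known if k >= i)
            if left == right:
                d = diff[left]
            else:
                t = (i - left) / (right - left)
                d = diff[left] + t * (diff[right] - diff[left])
        red.append(green[i] + d)
    return red

# A bright stripe in the middle of the row, fully captured in green.
green = [10, 10, 50, 50, 10, 10]
# Red was only measured at two sites, both outside the stripe.
red_samples = {0: 14, 4: 14}

red = interpolate_red_guided(green, red_samples)
print(red)
```

Interpolating the two red samples on their own would give a flat row of 14 and miss the stripe completely. With green as the guide, the reconstructed red follows the stripe, which is why a clean green channel matters so much for the final image.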

Apple’s strategy

This sensor idea also fits into a larger Apple direction that is very relevant to filmmakers who want more control from small cameras. Apple has explored more ambitious camera module concepts in Apple Modular Camera System Interchangeable Lenses Patent, which points toward a future where optics can become more intentional, not just a fixed phone lens with computational correction. If Apple is thinking seriously about optics and modularity, then improving the underlying autofocus and resolution tradeoffs at the sensor level is a logical prerequisite.

It is also important to be clear about what this patent does not claim. It does not mention readout speed directly, and it is not describing a global shutter or a rolling shutter fix. That said, Apple’s work on motion artifacts is clearly present elsewhere, and the best comparison is Apple Global Shutter iPhone Sensor. Global shutter aims to solve motion skew and warping by capturing the frame differently in time. US 20260006927 A1 solves a different filmmaker problem, which is how to gather autofocus information without degrading the channel that makes frames look sharp. These are complementary directions, not competing ones.

Autofocus to be less intrusive

In real filmmaking conditions, autofocus struggles usually appear in three places. The first is low light, where phase detection can become noisy and focus begins to hunt. The second is motion, where tracking has to be fast but also visually calm. The third is fine texture, where any softness introduced by the sensor design becomes noticeable once the footage is graded or sharpened. Apple’s patent is aimed at that third problem directly, and it supports the first two problems indirectly by improving the quality and reliability of the data the autofocus system reads.

The most practical way to describe the promise of US 20260006927 A1 is that it tries to make autofocus less intrusive. Instead of letting autofocus architecture touch the most important detail pixels, it pushes that work into red and blue areas. If Apple implements this well, filmmakers could get footage that holds detail better during focus pulls and tracking shots, especially in handheld work where the subject distance changes constantly.

Takeaway

The goal of US 20260006927 A1 is to remove a hidden quality penalty that comes with modern autofocus. Apple’s approach is to protect green resolution while still extracting phase detection autofocus data. For filmmakers, that could mean sharper-looking video during motion, more natural textures, and fewer moments where footage looks soft even though autofocus has locked correctly.
